How Google Discover REALLY Works

Breaking down Discover alongside the Google Leak to show you exactly how I think the black box really works

This is all based on the Google leak and tallies up with my experience of content that performs in Discover. I have pulled out what I think are the most relevant Discover proxies and grouped them into what seems like the appropriate workflow.

Like a disgraced BBC employee, thoughts are my own. Not a perfect science.

TL;DR

Your site needs to be seen as a ‘trusted source’ with low SPAM, evaluated by a variety of proxies like site, author and trust scores in order to be eligible

I think Discover is driven by a six-part pipeline, using good vs. bad clicks (long dwell time vs. pogo-sticking) and repeat visits to continuously score and re-score content quality

Fresh content gets an initial boost. Success hinges on a strong CTR and positive early-stage engagement (good clicks/shares from all channels count, not just Discover).

Content that aligns with a user’s interests is prioritised. To optimise, focus on your areas of topical authority, use compelling headlines, be entity-driven and use large (1200px+) images.

Updated: I had used the wrong names for Google Leak proxies thanks to a touch of carelessness and a malfunctioning database and custom GPT. These have now been updated. Thank you to Johan Hülsen for pointing this out.

There are dozens of potential proxies that Google likely uses to satiate the doomscrollers’ desperate need for quality content in the Discover feed. It’s not that different to how traditional Google search works.

But traditional search (a high-quality pull channel) is worlds apart from Discover. Audiences killing time on trains. At their in-laws. The toilet. Given they’re part of the same ecosystem, they’re bundled together into one monolithic entity.

And here’s how it works.

Google’s Discover guidelines

This section is boring and Google’s guidelines around eligibility are exceptionally vague:

Content is automatically eligible to appear in Discover if it is indexed by Google and meets Discover’s content policies

Any kind of dangerous, spammy, deceptive or violent/vulgar content gets filtered out

“…Discover makes use of many of the same signals and systems used by Search to determine what is… helpful, reliable, people-first content.”

Then they give some solid, albeit beige advice around quality titles - clicky, not baity as John Shehata would say. Ensuring your featured image is at least 1200px wide and creating timely, value-added content.

But we can do better.

Discover’s six part content pipeline

From cradle to grave, let’s review exactly how your content does or in most cases doesn’t appear in Discover. As always remembering I have made these clusters up, albeit based on real Google proxies from the Google Leak.

But they seem to tally very well with my (and others’) experience of the platform.

Eligibility check and baseline filtering

Initial exposure and testing

User quality assessment

Engagement and feedback loop

Personalisation layer

Decay and renewal cycles

Eligibility and baseline filtering

For starters, your site has to be eligible for Google Discover. This means you are seen as a ‘trusted source’ on the topic and you have a low enough SPAM score that the threshold isn’t triggered.

There are a few primary proxy scores I suspect account for eligibility and baseline filtering. Before your site can feature on the platform, it has to hit a few basic criteria.

siteAuthority, trust or trustedScore: scores that evaluate a site’s reliability and reputation and form the basis of eligibility filtering at a platform and topic level.

topicalityScore: a higher topicality score helps Discover identify trusted sources at the topic level.

isAuthor and authorObfuscatedGaiaStr help Google identify authoritative sources alongside site level metrics.

The site’s reputation and topical authority are ranked for the topic at hand. These three metrics help evaluate whether your site is eligible to appear in Discover. We know authorship is scored and matters too. We just don’t know to what extent.

You can find out more about SPAM proxies like SpamRank or GibberishScore here.

Initial exposure and testing

This is very much the freshness stage, where fresh content is given a temporary boost (because contemporary content is more likely to satiate a dopamine addicted mind).

browsyTopic: Based on a lot of prior data, a Discover-worthy topic can be identified using an experimental topic score

isHotDoc: Extremely new articles around trending topics get a short term ranking boost.

freshnessboxArticleScores: helps Google prioritise more recent articles for time sensitive queries. Ditto contentfreshness.

goldmineTrustFactor: Google uses this to prioritise articles and titles with a good reputation and history of reliability. See Shaun Anderson’s excellent work on this.

I would hypothesise that using a Bayesian style predictive model, Google applies learnings at a site and subfolder level to predict likely CTR. The more quality content you have published over time (presumably at a site, subfolder and author level), the more likely you are to feature.

Because there is less ambiguity. A key feature of SEO now.
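To make that hypothesis a bit more concrete, here is a minimal sketch of a Bayesian-style CTR estimate. It is my own illustration, not anything taken from the leak: the Beta prior, the `strength` parameter and every number in it are assumptions. It shows how a subfolder's history could set an expected CTR that early click data then pulls up or down.

```python
from dataclasses import dataclass


@dataclass
class CtrPrior:
    """Beta distribution over CTR (hypothetical model, not a leaked one)."""
    alpha: float  # pseudo-clicks
    beta: float   # pseudo-impressions that did not get a click

    def update(self, clicks: int, impressions: int) -> "CtrPrior":
        # Standard Beta-Binomial update: add observed clicks and non-clicks.
        return CtrPrior(self.alpha + clicks, self.beta + impressions - clicks)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


def prior_from_history(clicks: int, impressions: int, strength: float = 100.0) -> CtrPrior:
    """Compress a site's or subfolder's history into a prior.

    `strength` is an assumed knob: how many impressions of new evidence
    the history is worth before fresh data starts to dominate.
    """
    rate = clicks / impressions if impressions else 0.0
    return CtrPrior(alpha=rate * strength, beta=(1 - rate) * strength)


# Made-up numbers: a subfolder with a 5% historical CTR...
subfolder_prior = prior_from_history(clicks=5_000, impressions=100_000)
# ...and a new article that gets 30 clicks from its first 200 impressions.
new_article = subfolder_prior.update(clicks=30, impressions=200)

print(f"expected CTR before launch: {subfolder_prior.mean:.3f}")
print(f"estimate after early data:  {new_article.mean:.3f}")
```

The estimate lands between the historical rate and the observed early rate. That is the point: a strong publishing history gives a new article a head start, and early engagement decides whether it keeps it.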

User quality assessment

An article is ultimately judged by the quality of user engagement. Google has used the good and bad click style model from Navboost to establish what is and isn’t working for users for years.

Low CTR and/or pogosticking style behaviour downgrades an article’s chance of featuring. No reason to think any different in Discover.

unsquashedClicks and impressions: Data that suggests a high CTR would work well for a person and cohort of users. See also dailyClicks.

clicksTotalInterval: Total number of clicks received within a specific time period. For Discover, I suspect this is compressed.

imageClickRank: Interesting to note Navboost stores image data. As these models have some pretty advanced reasoning, not unreasonable to suspect they know what type of headline and image combo will perform well.

The quality of any article is measured by its user engagement. Cohorts of users can be grouped together to form effective personalisation testing. People with the same general interests are served the content on the assumption that they will be interested too.

Speed and scale can be achieved on Discover far more rigorously.

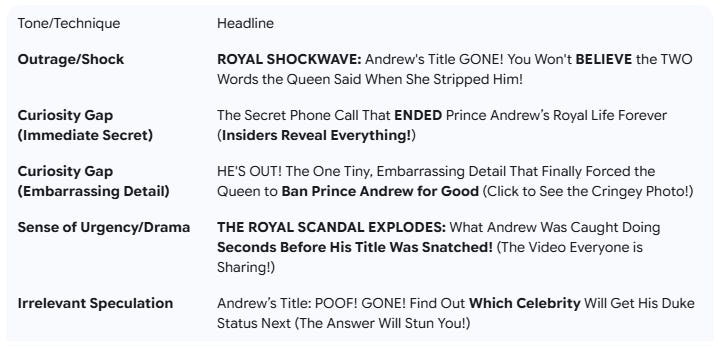

But if the overly clicky or misleading title delivers poor engagement (dwell time and on page interactions), then the article may be downgraded. Over time this kind of practice can compound and nerf your site completely.

Important to note that this click data doesn’t have to come from Discover. Once an article is out in the ether - it’s been published, shared on social etc - Chrome click data is stored and is applied to the algorithm.

So the more quality click data and shares you can generate early in an article’s lifecycle (accounting for the importance of freshness), the better your chance of success on Discover.

Treat it like a viral platform. Make noise. Do marketing.

Engagement and feedback loop

Once the article enters the proverbial fray, a scoring and rescoring loop begins. Continuous CTR, impressions, interactions and click quality feed models like Navboost to refine what gets shown.

Valuable content is decided by the good vs bad click ratio. Quality visits are used to measure lasting satisfaction and re-rank top performing content.

lastLongestClick: likely measures user satisfaction at a topic and cohort level in Discover

clicksBad and clicksGood: once it has aggregated enough data, it can make a distinction at an article level for good vs bad click data.
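As a rough illustration of the good vs bad click idea, here is a short sketch that splits clicks by dwell time. The 30 second threshold and the function names are my assumptions. The leak names clicksGood, clicksBad and lastLongestClick but doesn't tell us how they are calculated.

```python
# Hypothetical cut-off: the leak gives no threshold for a "good" click.
GOOD_DWELL_SECONDS = 30.0


def good_click_ratio(dwell_seconds: list[float], threshold: float = GOOD_DWELL_SECONDS) -> float:
    """Share of clicks whose dwell time meets the threshold."""
    if not dwell_seconds:
        return 0.0
    good = sum(1 for dwell in dwell_seconds if dwell >= threshold)
    return good / len(dwell_seconds)


def longest_click(dwell_seconds: list[float]) -> float:
    """Longest single visit, a stand-in for a lastLongestClick style signal."""
    return max(dwell_seconds, default=0.0)


# Made-up dwell times (in seconds) for one article within one cohort.
article_dwell = [4.0, 95.0, 120.0, 7.0, 61.0]

print(f"good click ratio: {good_click_ratio(article_dwell):.0%}")
print(f"longest click: {longest_click(article_dwell):.0f}s")
```

Two of the five visits here are pogo-stick style bounces, so the article scores three good clicks out of five. An overly clicky headline pushes that ratio down even if it pushes CTR up.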

Google uses re-ranking functions called Twiddlers including a FreshnessTwiddler to boost newer content in traditional search.

In Discover this is all quite simple. Apply impression and click data at a topic and cohort level over a compressed period of time. Voila.

These behavioural signals define an article’s success. It lives or dies on relatively simple metrics. And the more you use it, the better it gets. Because it knows what you and your cohort are more likely to click and enjoy.

I imagine headline and image data are stored so that the algorithm can apply some rigorous standards to statistical modelling. Once it knows what types of headlines, images and articles perform best for specific cohorts, personalisation becomes effective faster.

Personalisation layer

Now I don’t want to panic you, but Google knows a lot about us.

It collects a lot of non-anonymised data (credit card details, passwords, contact details etc) alongside every conceivable interaction you have with web pages. It knows what we care about in the moment and how we’ve evolved over time.

Discover takes personalisation to the next level. I think it may offer an insight into what part of the SERP could look like in the future. A personalised cluster of articles, videos and social posts designed to hook you in, embedded somewhere alongside search results and AI Mode.

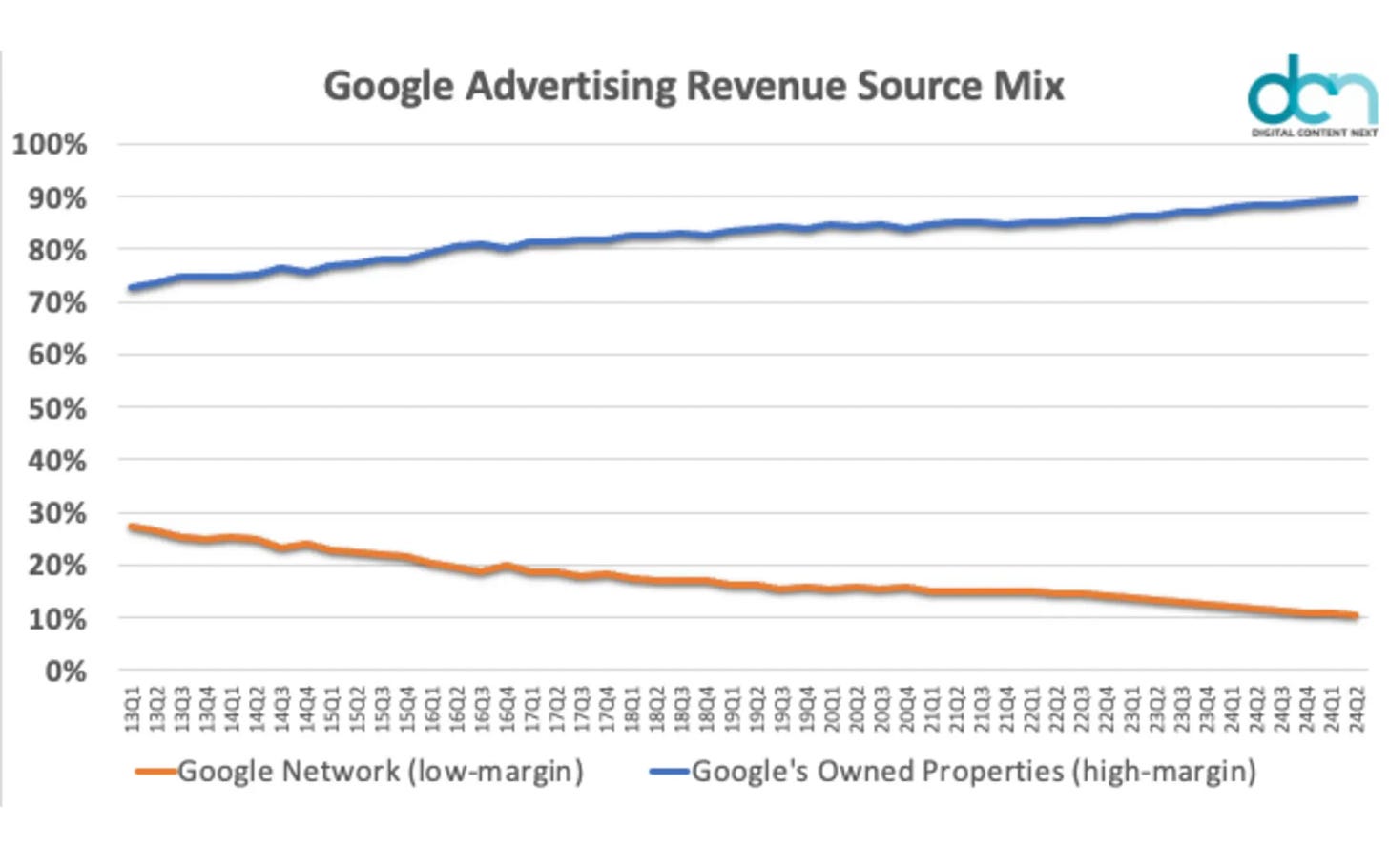

All of this is designed to keep you on Google’s owned properties for longer. Because they make more money that way. And they have the click data in Discover to do it.

It starts getting a bit messy with specific personalisation modules from the leak. Fair to say that the below help the algorithm improve user experience by leveraging past interactions and experiences.

personalizationGameWebEventsConsolidatedEvents

copleySourceTypeList

RepositoryWebRefPersonalizationContextOutputs

socialgraphNodeNameFp

If you search for things from or about specific people, places or organisations (entities!) more frequently, that will be taken into account. Think of everything you do as a fragment of your digital fingerprint. One Google almost monopolistically owns.

In search at least!

Content that matches well with your personal and cohort’s interest will be boosted into your feed.

You can see the sites you engage with frequently using the site engagement page in Chrome (from your toolbar: chrome://site-engagement/) and every stored interaction with histograms. This histogram data indirectly shows key interaction points you have with web pages, by measuring the browser’s response and performance around those interactions.

It doesn’t explicitly say user A clicked x, but logs the technical impact. I.e. how long did the browser spend processing said click or scroll.

Decay and renewal cycles

Discover boosts freshness because people are thirsty for it. By boosting fresh content, older or saturated stories naturally decay as the news cycle moves on and article engagement declines.

For successful stories in Discover, this is probably through market saturation.

Google stores substantial date data in its PerDocData (Google’s leaked core document model). This could be applied at a topic and entity level in Discover.

timeSensitivity is also stored to help identify content where recency and freshness is more important.

And the NLPSemanticParsingModel stores the publication time in a direct effort to prioritise fresher content on topics specifically from news sites.
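One simple way to picture this decay is a half-life applied to an article's engagement score. This is purely my sketch: the half-life values, the sensitivity labels and the function names are invented for illustration, and nothing in the leak confirms Google uses an exponential curve.

```python
# Hypothetical half-lives in hours, keyed by how time-sensitive the content is.
HALF_LIFE_HOURS = {
    "breaking": 6.0,
    "standard": 24.0,
    "evergreen": 24.0 * 30,
}


def freshness_multiplier(age_hours: float, half_life_hours: float) -> float:
    """Exponential decay: the multiplier halves every `half_life_hours`."""
    if half_life_hours <= 0:
        raise ValueError("half_life_hours must be positive")
    return 0.5 ** (max(age_hours, 0.0) / half_life_hours)


def decayed_score(engagement: float, age_hours: float, sensitivity: str = "standard") -> float:
    """Engagement score discounted by the article's age."""
    return engagement * freshness_multiplier(age_hours, HALF_LIFE_HOURS[sensitivity])


# The same engagement score, twelve hours after publication.
for label in HALF_LIFE_HOURS:
    print(label, round(decayed_score(100.0, age_hours=12.0, sensitivity=label), 1))
```

Under these assumptions a breaking story loses three quarters of its score in twelve hours, while an evergreen piece barely moves. That matches what you see in the feed: news burns out fast, evergreen content lingers.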

None of us spend time in Google. It’s not fun. It isn’t designed to hook us in and ruin our attention spans with constant spiking of dopamine.

But Google Discover is clearly on the way to that. They want to make it a destination. A social network. Hence all the recent changes where you can ‘catch up’ with creators and publishers you care about across multiple platforms. Videos, social posts, articles… The whole nine yards.

I wish they’d fuck off with summarising literally everything with AI however.

My 11 step workflow to get the most out of Google Discover

Follow basic principles and they will stand you in good stead. Understand where your site is topically strong and focus your time on content that will drive value. There are multiple ways you can do this.

If you don’t feature much in Discover, you can use your Search Console click and impressions data to identify areas where you generate the highest value. Where you are topically authoritative. I would do this at a subfolder and entity level (e.g. politics and Rachel Reeves or the Labour Party).

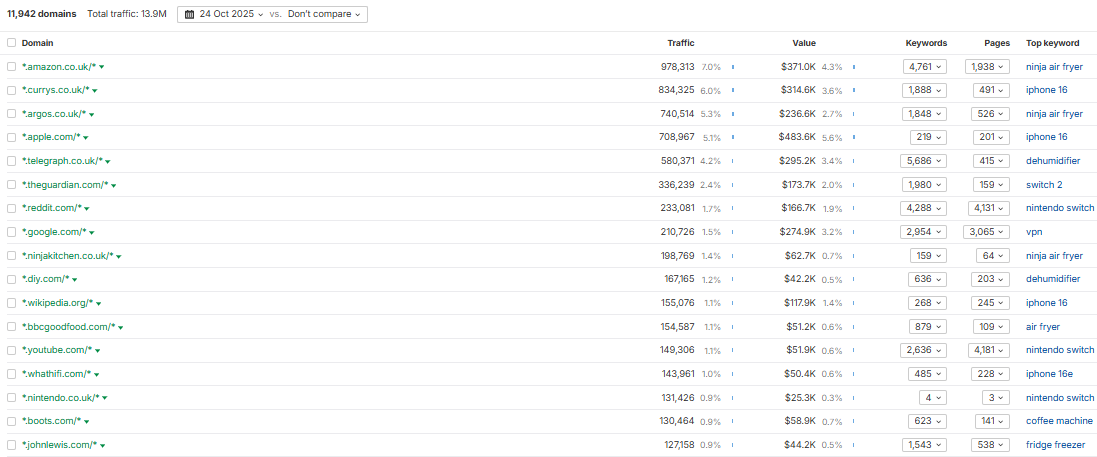
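If you want to do that subfolder breakdown yourself, here is a minimal sketch. It assumes you have already exported page-level rows from Search Console into a list of dictionaries. The `page`, `clicks` and `impressions` keys, the example.com URLs and the numbers are all placeholders of mine, not a real export format.

```python
from collections import defaultdict
from urllib.parse import urlparse


def subfolder(url: str) -> str:
    """Return the first path segment as a subfolder, e.g. '/politics/'."""
    segments = [segment for segment in urlparse(url).path.split("/") if segment]
    return f"/{segments[0]}/" if segments else "/"


def totals_by_subfolder(rows: list[dict]) -> list[tuple[str, int, int]]:
    """Sum clicks and impressions per subfolder, highest clicks first."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for row in rows:
        bucket = totals[subfolder(row["page"])]
        bucket[0] += row["clicks"]
        bucket[1] += row["impressions"]
    ranked = sorted(totals.items(), key=lambda item: item[1][0], reverse=True)
    return [(folder, clicks, impressions) for folder, (clicks, impressions) in ranked]


# Placeholder rows standing in for a Search Console pages export.
rows = [
    {"page": "https://example.com/politics/rachel-reeves-budget", "clicks": 900, "impressions": 12000},
    {"page": "https://example.com/politics/labour-party-conference", "clicks": 600, "impressions": 9000},
    {"page": "https://example.com/sport/transfer-news", "clicks": 200, "impressions": 8000},
]

for folder, clicks, impressions in totals_by_subfolder(rows):
    print(folder, clicks, impressions, f"{clicks / impressions:.1%}")
```

The same grouping works at an entity level if you swap `subfolder` for a function that tags each URL with the people or organisations it covers.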

Also worth breaking this down in total and by article. Or you can use something like Ahrefs’ Traffic Share report to determine your share of voice via third party data.

Then really focus your time on a) areas where you’re already authoritative and b) areas that drive value for your audience.

Assuming you’re not focusing on NSFW content and you’re vaguely eligible, here’s what I would do:

Make sure you’re meeting basic image requirements. 1200 pixels wide as a minimum.

Identify your areas of topical authority. Where do you already rank effectively at a subfolder level? Is there a specific author who performs best? Try to build on your valuable content hubs with content that should drive extra value in this area.

Invest in content that will drive real value (links and engagement) in these areas. Do not chase clicks via Discover. It’s a one way ticket to clickbait city. It’s shit there.

Make sure you’re plugged into the news cycle. Being first has a huge impact on your news visibility in search. If you’re not first on the scene, make sure you’re adding something additional to the conversation. Be bold. Add value. Understand how news SEO really works.

Be entity driven. In your headlines, first paragraph, subheadings, structured data and image ALT text. Your page should remove ambiguity. You need to make it incredibly clear who this page is about. A lack of clarity is partly why Google rewrites headlines.

Use the Open Graph title. The OG title is a headline that doesn’t show on your page. Primarily designed for social media use, it is one of the most commonly picked up headlines in Discover. It can be jazzy. Curiosity led. Rich. Interesting. But still entity-focused.

Make sure you share content likely to do well on Discover across relevant push channels early in its lifecycle. It needs to outperform its predicted early stage performance.*

Create a good page experience. Your page (and site) should be fast, secure, ad-lite and memorable for the right reasons.

Try to drive quality onward journeys. If you can treat users from Discover differently to your main site, think about how you would link effectively for them. Maybe you use a pop-up ‘we think you’ll like this next’ section based on a user’s scroll depth or dwell time.

Get the traffic to convert. Whilst Discover is a personalised feed, the standard scroller is not very engaged. So focus on easier conversions like registrations (if you’re a subscriber first company) or advertising revenue et al.

Keep a record of your best performers. Evergreen content can be refreshed and repubbed year after year. It can still drive value.

*What I mean here is if your content is predicted to drive three shares and two links, if you share it on social and in newsletters and it drives seven shares and nine links, it is more likely to go viral.

As such, the algorithm identifies it as ‘Discover-worthy.’
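For the image and Open Graph steps in the workflow above, a quick check like the one below can catch the basics before you publish. It is only a sketch: it reads what the page declares in its `og:title` and `og:image:width` meta tags, it does not download or measure the image, and the function name and messages are my own.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collects the first value of each og: meta property in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property")
        content = attributes.get("content")
        if prop and prop.startswith("og:") and content:
            self.tags.setdefault(prop, content)


def discover_issues(html: str, min_width: int = 1200) -> list[str]:
    """Return a list of problems with the declared OG title and image width."""
    parser = OpenGraphParser()
    parser.feed(html)
    issues = []
    if "og:title" not in parser.tags:
        issues.append("missing og:title")
    width = parser.tags.get("og:image:width", "")
    if not width.isdigit():
        issues.append("og:image:width not declared")
    elif int(width) < min_width:
        issues.append(f"og:image is {width}px wide, below {min_width}px")
    return issues


# Placeholder markup for illustration only.
page = """
<head>
  <meta property="og:title" content="Rachel Reeves sets out her first Budget" />
  <meta property="og:image" content="https://example.com/reeves.jpg" />
  <meta property="og:image:width" content="800" />
</head>
"""

print(discover_issues(page))
```

Because it trusts the declared width, a page can pass this check and still serve a small image. Treat it as a first filter, not proof.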

Anyway that’s it, let me know what you think in the comments!