Google's Evergreen Article Scoring System Uncovered

A patent breakdown that shows exactly how in-depth articles are scored and why authorship and site structure really matter

Back to some good ol’ fashioned SEO.

Google has a patent called Surfacing In-Depth Articles in Search Results. It’s a gold mine. Really. Full of indexes I didn’t know existed. Author, relevancy, article and site-pattern level scoring.

The whole nine bloody yards.

Sidenote, but I love how these patent naming conventions - US20150379140A1 - make them sound like US naval destroyers. Big, bold and brash they are not.

I first saw this patent in a post by Koray Tugberk (Holistic SEO). Well worth a follow.

TL;DR

The system creates a separate index for quality, long-form content called the In-Depth Article Index

There’s a two-tiered scoring system for in-depth articles: a pre-calculated In-Depth Article Score and a real-time Topicality Score

Authorship and subfolder performance really matter. Authors and subfolders with strong evergreen-like metrics will (theoretically) boost performance

Consistent link acquisition over time is far more important than a one-off burst. Truly evergreen content should naturally accrue the right signals over time

What does this patent do?

It describes how Google identifies and surfaces the best in-depth articles in search results. It’s not just about traditional relevance signals. High-quality, long-form content demands more. Content that stands head and shoulders above the rest because it needs to.

The user’s query is nuanced.

Much like bloody Starmer’s Britain, there is a two-tiered scoring system. One that identifies the true quality of an article and then ranks it based on its relevance to a query. An entity, even.

This scoring system helps Google create a dedicated index for queries and topics. Each article is then ranked for a given query using a new metric - the Document Score.

Instead of treating all content equally, the system creates a standalone index of these ultra detailed articles. When a user searches for a query that demands depth, it is used.

What constitutes a (quality) in-depth article?

It’s not length, I’ll tell you that much.

I wrote in-depth (ironically) about how to write great content. I don’t think there’s much separation between the two.

Great content requires an appropriate level of depth. Proper exploration. It must:

Answer the question(s) (and the appropriate follow-ups)

Demonstrate obvious expertise and trust

Hit three of the four E’s to truly resonate with the audience

Be unique and original (in some way). Incredibly well researched. Owned data, analysis, visuals etc.

Be remembered for the right reasons

Be well structured and have the appropriate level of depth

For all of this to work, for in-depth content to fly, it has to engage. It has to be shared. SEO-wise, it needs to accrue links at a reasonable velocity over time. That’s one of the fundamental concepts of this patent.

You can’t do that with slop. The pig’s in the trough but he wants a change. Your regurgitated fermented grain mash isn’t cutting the proverbial mustard.

It is all about adding value.

Unless you can buy aged or expired domains and spin up casino content on them. Then who cares? You don’t need me.

Key frameworks and scores

The patent revolves around three key frameworks and scores.

The In-Depth Article Score

A primarily pre-calculated, document-specific score that measures an article's perceived quality. Perceived by a robot, but perceived nonetheless. Independent of any specific search query.

It’s designed to calculate whether your pitiful article is worthy of inclusion in the In-Depth Article Index.

Think of it like Epstein Island or a Diddy Party. Only the select few make it. Then claim they were never really friends and become President of the US or a disgraced member of the Royal Family.

This composite score is derived from five others.

Article Score: The system looks for long-form content with a high word count and longer paragraphs. Something that resembles a narrative via coverage, structure and length rather than a straight answer.

Commercial Score: If the commercial score is too high, the article is filtered out of the index. Commercial phrases are a dead giveaway (offer, sale, or discount prices). As are interactive elements like credit card fields.

Evergreen Score: Is the article adding value months or years after publication? A steady stream of links over a long period suggests sustained interest. For newer articles, Google use a Bayesian-type predictive model, using factors like word count (lol) and early link growth to estimate each article's potential.

Site Pattern Score: This is a probability score designed to estimate the long-term value of an article based on previous subfolder performance. For example, articles published under a /travel/ directory have a higher probability of being evergreen than those under a /news/ directory. Evergreen content that sits under /news/ will be suppressed compared to /travel/. Theoretically.

Author Score: Authors hate being judged. Especially by machines. The system tracks the author’s history across documents and can assign weight if they have a track record of producing in-depth content. Authorship really does matter, particularly for evergreen content.
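To make the five components a bit more concrete, here is a minimal sketch of how they might roll up into one number. Everything in it is an assumption for illustration: the `SubScores` class, the `ida_score` function, the 0 to 1 scale, the unweighted average and the `COMMERCIAL_CUTOFF` value are all made up. The patent doesn't publish a formula or a threshold. The only behaviour taken from the breakdown above is that a high Commercial Score filters the article out rather than just dragging it down.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cut-off. The patent doesn't publish a number.
COMMERCIAL_CUTOFF = 0.7


@dataclass
class SubScores:
    """All values assumed to sit on a 0-1 scale (an assumption)."""
    article: float       # long-form, narrative structure
    commercial: float    # sales language, checkout fields
    evergreen: float     # sustained link interest over time
    site_pattern: float  # the subfolder's evergreen track record
    author: float        # the author's history of in-depth work


def ida_score(s: SubScores) -> Optional[float]:
    """Return a composite score, or None if the article is filtered out."""
    if s.commercial > COMMERCIAL_CUTOFF:
        return None  # too salesy: excluded, not just down-weighted
    # Assumption: an unweighted mean of the four positive signals.
    return (s.article + s.evergreen + s.site_pattern + s.author) / 4


guide = SubScores(article=0.8, commercial=0.1, evergreen=0.6,
                  site_pattern=0.8, author=0.6)
sales_page = SubScores(article=0.9, commercial=0.9, evergreen=0.9,
                       site_pattern=0.9, author=0.9)

print(ida_score(guide))       # a number between 0 and 1
print(ida_score(sales_page))  # None: filtered by the commercial check
```

The point of the sketch is the shape, not the weights: four signals push an article up, one acts as a gate.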

If you’re ever considering where evergreen content should sit for maximum effect, remember that the system learns statistical associations between URL paths and evergreen content. So evergreen content migrations can be clearly explained. As can author performance.
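Here is a rough sketch of what "learning statistical associations between URL paths and evergreen content" could look like. It is not the patent's method. The function names, the `example.com` URLs, the history data and the smoothing (a simple prior so that a folder with two articles doesn't get a wildly confident score) are all my assumptions.

```python
from collections import defaultdict
from urllib.parse import urlparse


def first_folder(url: str) -> str:
    """Return the top-level subfolder of a URL, e.g. '/travel/'."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    # A single path segment is a page at the root, not a folder.
    return f"/{parts[0]}/" if len(parts) > 1 else "/"


def site_pattern_scores(history, prior=0.5, strength=2.0):
    """history: iterable of (url, was_evergreen) pairs.

    Returns folder -> smoothed probability that an article published
    there turns out to be evergreen. `prior` and `strength` are
    hypothetical smoothing parameters.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for url, was_evergreen in history:
        folder = first_folder(url)
        totals[folder] += 1
        hits[folder] += int(was_evergreen)
    return {
        folder: (hits[folder] + prior * strength) / (totals[folder] + strength)
        for folder in totals
    }


# Made-up history: three articles per folder.
history = [
    ("https://example.com/travel/lisbon-guide", True),
    ("https://example.com/travel/rail-passes", True),
    ("https://example.com/travel/packing-list", True),
    ("https://example.com/news/budget-day", False),
    ("https://example.com/news/strike-update", False),
    ("https://example.com/news/match-report", False),
]

scores = site_pattern_scores(history)
print(scores["/travel/"] > scores["/news/"])  # True
```

A new article published under /travel/ would inherit the folder's higher probability before it has earned a single link of its own. That is the migration argument in a nutshell.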

How conceptual models can help you (easily) create better content

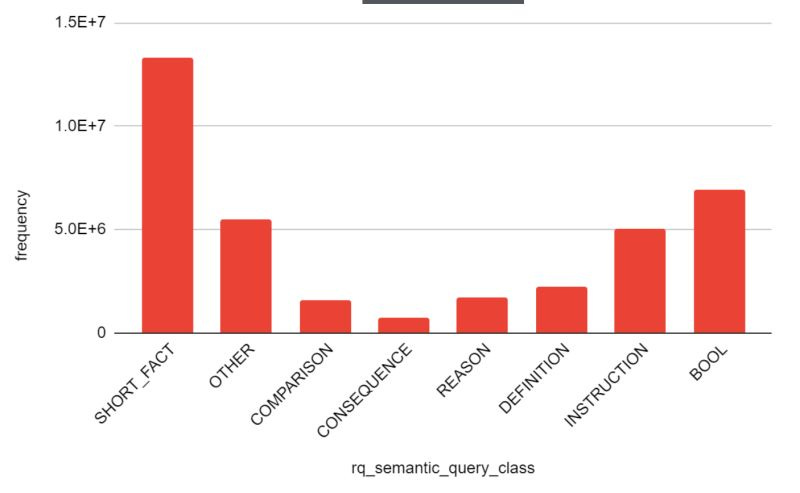

Towards the back end of last year, Mark Williams-Cook and the team at Candour uncovered one of the more thought provoking tidbits in recent times. Hidden within 2TB of data, 90 million search queries, and 2,000 properties lies a glimpse into Google’s thought process.

The Topicality Score

The Topicality Score is a relevance score that is (mostly) calculated in real-time, for each query. It measures how relevant each in-depth article is to that particular term.

Topics and entities associated with the user's query are compared to those found within the in-depth article. A higher degree of topical overlap results in a higher score.

Nothing new there.

It acts as a bridge between the user's immediate information need and the higher-quality content that exists in the specialised index.
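A minimal sketch of the overlap idea, assuming the query and the article have each already been reduced to a set of entities. The function name, the entity sets and the choice of "share of the query's entities covered by the article" as the measure are my assumptions. The patent only says that more topical overlap means a higher score.

```python
def topicality_score(query_entities: set, article_entities: set) -> float:
    """Share of the query's entities that the article also covers (0-1)."""
    if not query_entities:
        return 0.0
    shared = query_entities & article_entities
    return len(shared) / len(query_entities)


# Hypothetical entities extracted from a query and an article.
query = {"lisbon", "itinerary", "food"}
article = {"lisbon", "food", "trams", "fado"}

print(topicality_score(query, article))  # 2 of the 3 query entities covered
```

Dividing by the query side rather than the union means a long article isn't punished for covering extra ground, which seems the right bias for in-depth content. Again, an assumption.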

Think of topical authority at an article level. Topical coverage. Query fanning. Semantic SEO. Whatever drivelling buzzword you want to use.

Have you answered all of the relevant queries your audience has to offer here?

Do you understand them well enough to know what they want to know on each page?

Does it fit with the wider search narrative?

The Document Score

The final boss. The Document Score.

You’ve created a supposedly in-depth article. At least by your own appalling standards. Now your document gets scored. Judged by a predetermined set of predictive analytics.

It is the final ranking used to select which in-depth articles to display. It is the definitive metric for a given search query.

It is calculated by combining the article's pre-existing In-Depth Article (IDA) Score with its real-time Topicality Score for the given query. The Document Score can be a sum or product of the two scores.

The Document Score ensures that the in-depth articles displayed are both high-quality (high IDA Score) and highly relevant to the user's query (high Topicality Score).

This two-part approach prevents low-quality but relevant content from being shown, while also preventing high-quality but irrelevant content from being ranked.
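The sum-or-product choice is easy to sketch. The function name, the `mode` parameter and the example numbers are mine. The patent only says the two scores can be added or multiplied.

```python
def document_score(ida: float, topicality: float, mode: str = "product") -> float:
    """Combine the pre-calculated IDA Score with the per-query Topicality Score."""
    if mode == "product":
        return ida * topicality
    if mode == "sum":
        return ida + topicality
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical articles: (IDA Score, Topicality Score for this query).
brilliant_but_off_topic = (0.9, 0.0)
solid_and_on_topic = (0.7, 0.8)

for ida, topicality in (brilliant_but_off_topic, solid_and_on_topic):
    print(document_score(ida, topicality, "product"),
          document_score(ida, topicality, "sum"))
```

With a product, an article that scores zero on either side scores zero overall, however good the other number is. With a sum, the brilliant but off-topic article still carries its 0.9 into the ranking. Same inputs, quite different behaviour at the extremes.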

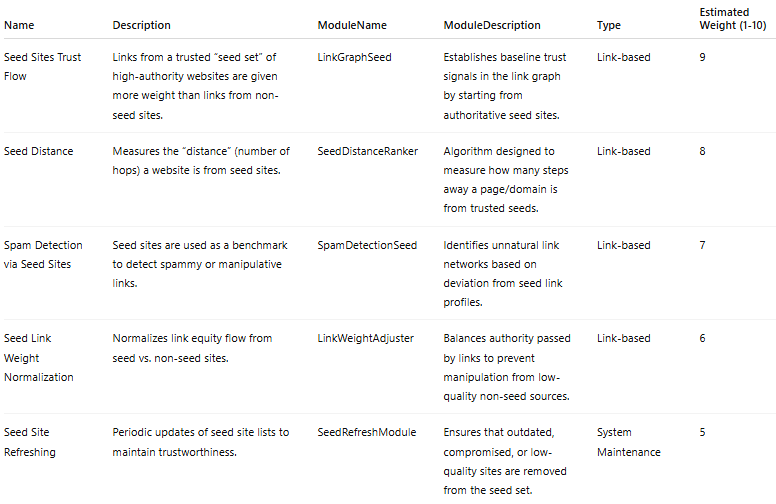

How the IDA Search Index is Created

Like a hangover recovery post 30, it is a multi-step process. One with slightly less groaning and electrolytes.

Stellar sources are identified: A set of seed websites - publishers known for high-quality, in-depth content - are identified. I suspect at a topic/query level. Probably driven by link acquisition, mentions and user engagement over time. Possibly also accreditations and authorship.

Based on the seed sites, target sites are then identified to expand the pool of trusted sources. Websites with similar anchor text profiles are considered topically similar. Low-quality seed websites, known for their commercial or low-quality content, are removed. This creates the final set of target websites to be analysed.

Process and score content: Once the target websites are identified, their content is analysed to determine an IDA Score for each article. Only content that passes a certain threshold is added to the dedicated index.

I don’t know exactly what the threshold is, but the concept is sound.

Identify a quality target website

Remove spam

Score content

Create index
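Those four steps can be sketched as a small pipeline. Treat it as a toy under stated assumptions: the function name, the site names, the Jaccard overlap used for "similar anchor text profiles", the blocklist and both thresholds are invented. The IDA scores are passed in ready-made rather than calculated.

```python
def build_ida_index(seeds, anchor_profiles, blocklist, articles,
                    min_similarity=0.5, threshold=0.6):
    """Return url -> IDA score for articles that make the index.

    seeds:           set of seed site names
    anchor_profiles: site -> set of anchor-text terms pointing at it
    blocklist:       sites known for commercial or low-quality content
    articles:        list of (site, url, ida_score) tuples
    """
    def similar(a, b):
        # Jaccard overlap of anchor-text terms (an assumed measure).
        union = anchor_profiles[a] | anchor_profiles[b]
        return len(anchor_profiles[a] & anchor_profiles[b]) / len(union) if union else 0.0

    # 1. Identify target sites: the seeds plus anything with a similar profile.
    targets = set(seeds)
    for site in anchor_profiles:
        if any(similar(site, seed) >= min_similarity
               for seed in seeds if seed in anchor_profiles):
            targets.add(site)

    # 2. Remove spam.
    targets -= set(blocklist)

    # 3 and 4. Score content, keep only what clears the threshold.
    return {url: score for site, url, score in articles
            if site in targets and score >= threshold}


profiles = {
    "seed.example": {"travel", "guide", "itinerary", "hotels"},
    "similar.example": {"travel", "guide", "itinerary", "flights"},
    "shop.example": {"travel", "guide", "itinerary", "hotels"},
    "other.example": {"casino", "bonus"},
}
articles = [
    ("seed.example", "/a", 0.9),
    ("similar.example", "/b", 0.7),
    ("similar.example", "/c", 0.4),   # right site, score too low
    ("shop.example", "/d", 0.95),     # great score, blocklisted site
    ("other.example", "/e", 0.8),     # unrelated anchor profile
]

index = build_ida_index({"seed.example"}, profiles, {"shop.example"}, articles)
print(index)  # {'/a': 0.9, '/b': 0.7}
```

Note what gets an article excluded here: the wrong site, a blocklisted site or a weak score. Any one of them is enough.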

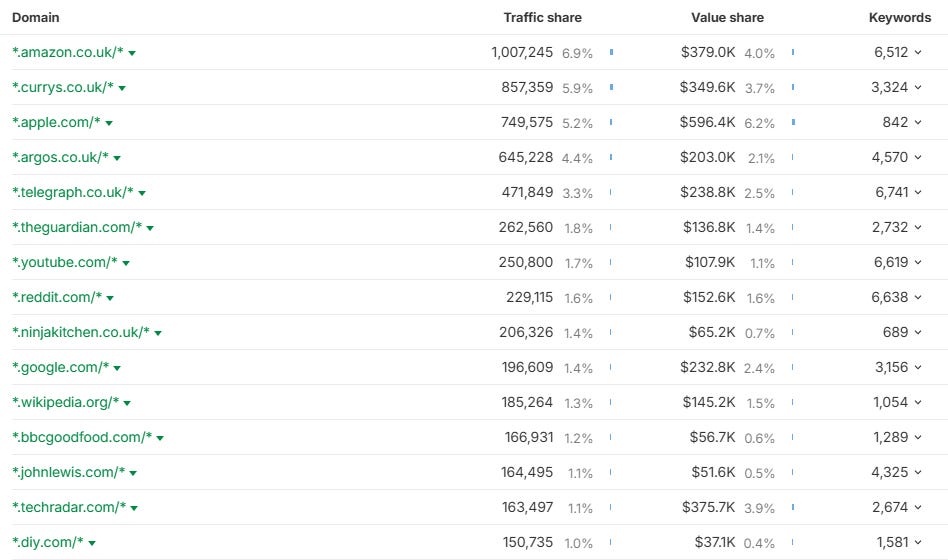

We have a pretty good idea of what the seed websites are based on link acquisition and traffic/share of voice data per category (see below traffic share report). We also have this searchable database, which is a bit shit unfortunately.

And for publishers, I suspect making sure your audience selects you as a preferred source may have some positive ramifications. Never one to sniff at real user data.

How In-Depth Content is Ranked

When something is queried, the algorithm determines if in-depth article search results are required to satisfy the request. The majority of transactional or purely navigational queries don’t require an IDA.

If something can be zero-clicked, it will be. If Google can answer the query itself, it will. For people like us, the IDA index is where it’s at.

If one of the top-ranking general search results is from a stellar source, the system can use this as a trigger condition. But it’s not the only condition. In some implementations, in-depth articles may be triggered based on query type (intent-based, etc).

Triggered harder than Liz Truss seeing a cabbage, the system then ranks the articles from the IDA index using the Document Score. The higher the score, the higher the ranking.

Simple.
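Simple enough to sketch, anyway. The trigger logic below (an informational query, or a stellar source among the top general results) is my loose reading of the two conditions described above, not a documented rule. The function name, the query type labels, the product-style Document Score and the data are all hypothetical.

```python
def rank_in_depth(query_type, top_result_sites, stellar_sources,
                  ida_index, topicality, k=3):
    """Return up to k article URLs ranked by Document Score, or [] if not triggered.

    ida_index:  url -> pre-calculated IDA Score
    topicality: url -> Topicality Score for this particular query
    """
    triggered = (
        query_type == "informational"
        or any(site in stellar_sources for site in top_result_sites)
    )
    if not triggered:
        return []

    # Assumption: Document Score as a product of the two scores.
    scored = {url: ida * topicality.get(url, 0.0)
              for url, ida in ida_index.items()}
    return sorted(scored, key=scored.get, reverse=True)[:k]


ida_index = {"/a": 0.9, "/b": 0.7, "/c": 0.8}
topicality = {"/a": 0.5, "/b": 0.9, "/c": 0.0}
stellar = {"seed.example"}

print(rank_in_depth("informational", ["blog.example"], stellar,
                    ida_index, topicality, k=2))   # ['/b', '/a']
print(rank_in_depth("navigational", ["brand.example"], stellar,
                    ida_index, topicality))        # []
```

The article with the best IDA Score (/a) loses to the more relevant one (/b). Quality gets you into the index. Relevance decides the order once you're in.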

Worth noting that the IDA index is periodically updated. That means publishers can enter/exit the index as new content is published or as older content drops below thresholds. Not permanent inclusion. That would be stupid. And I can only count about 40 stupid things Google have done over the last year.

What it means for us

I don’t know if this changes anyone’s approach to evergreen content. For those of us who work for large publishers or on multiple products, your authorship and site structure really matter.

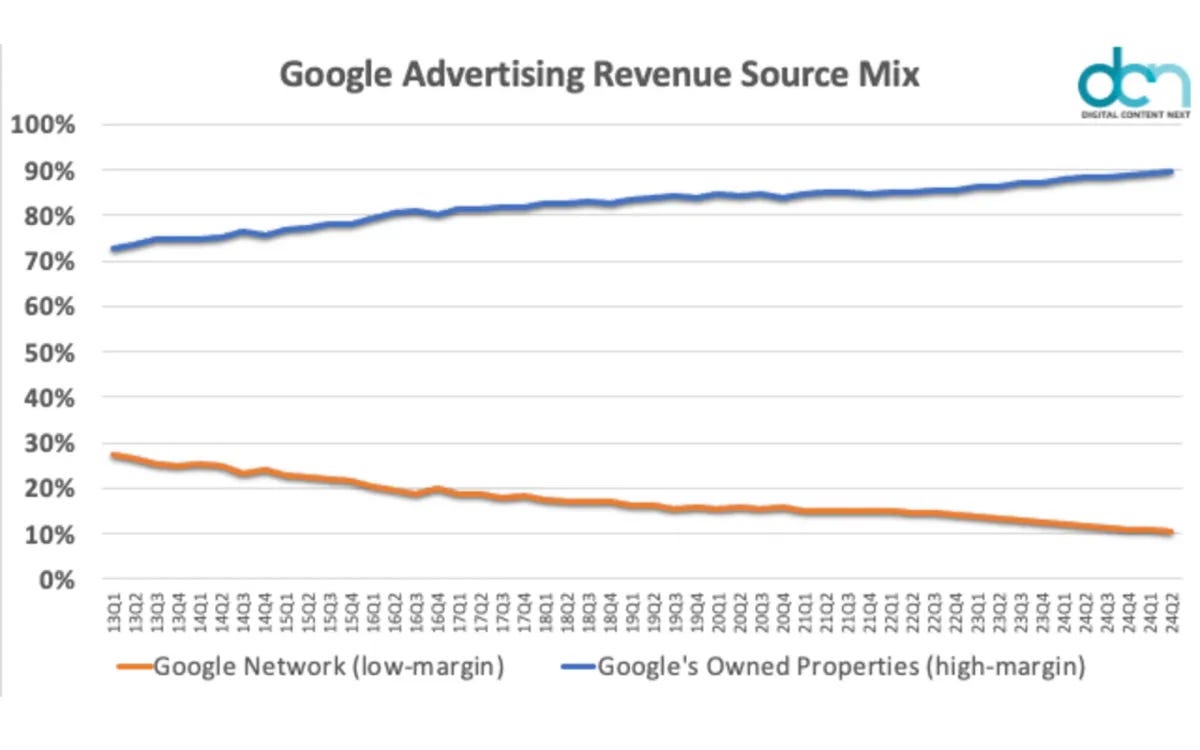

Understanding intent is more important than ever. And links. Links are still the foundation of ranking. The strongest trust signal available.

In-depth, evergreen content is so important that it has its own index

Quality is a quantifiable metric. Google actively quantifies and scores in-depth content for quality signals beyond the usual. It's not just about what you say, but how you say it (long-form, narrative style) and who you are (author credibility, publisher reputation).

Site architecture impacts evergreen performance. The Site Pattern Score implies that your site structure affects how Google perceives the quality and evergreen nature of that content.

Author performance is a ranking factor. The Author Score explicitly confirms that the system looks at the author's history and reputation. Because author and site history feed into IDA scores, a consistent body of evergreen, narrative work matters more than a single epic article.

Anchor text and link velocity matter. The Evergreen Score's reliance on anchor distribution and link velocity over time means a consistent stream of quality links is foundational.

Document Score vs Site Score. The final IDA Score includes the Site Pattern Score. The reputation of the site and its authors contribute directly to the IDA score, which in turn feeds into the Document Score. A dirty little nexus of sites, articles and authorship.

Commercialisation of informational evergreen content: Articles that veer into sales pitches will be excluded. Keep in-depth and commercial separate where possible.

While not explicit, user preferences/personalisation may influence which in-depth articles get surfaced. For example, if a user has a history of engaging with a source, that could weigh into the decision.
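Going back to the link velocity takeaway in the list above, the "steady stream beats a one-off burst" idea can be put into numbers. This is a sketch of my own, not the patent's Evergreen Score: the function name, the monthly link counts and the use of the coefficient of variation as a steadiness measure are all assumptions.

```python
from statistics import mean, pstdev


def link_steadiness(monthly_new_links):
    """Score how evenly links arrived: close to 1 is steady, close to 0 is bursty."""
    avg = mean(monthly_new_links)
    if avg == 0:
        return 0.0  # no links at all, nothing to be steady about
    # Coefficient of variation: spread relative to the average month.
    cv = pstdev(monthly_new_links) / avg
    return 1 / (1 + cv)


# Two made-up articles, each earning 60 links in a year.
steady = [5, 6, 5, 4, 6, 5, 5, 4, 6, 5, 5, 4]
burst = [60, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

print(link_steadiness(steady) > link_steadiness(burst))  # True
```

Same total, very different profile. If the system rewards sustained interest, the first article is the one that looks evergreen.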

That’s it. Let me know what you think. You know where to find me.
