How people REALLY use LLMs and what that means for Publishers

How LLM usage has developed over time tells us a lot about what it means for publishers. The market's expanding, but we have to be better.

OpenAI released the largest study to date on how users really use ChatGPT. I have painstakingly synthesised the findings you and I should pay heed to, so you don’t have to wade through the plethora of useful and pointless insights.

TL;DR

LLMs are not replacing search. But they are shifting how people access and consume information

Asking (49%) and Doing (40%) queries dominate the market and are increasing in quality

The top three use cases - Practical Guidance, Seeking Information, and Writing - account for 80% of all conversations

Publishers need to build linkable assets that add value. It can’t just be about chasing traffic from articles anymore

Chatbot 101

A chatbot is a statistical model trained to generate a text response given some text input. Monkey see, monkey do.

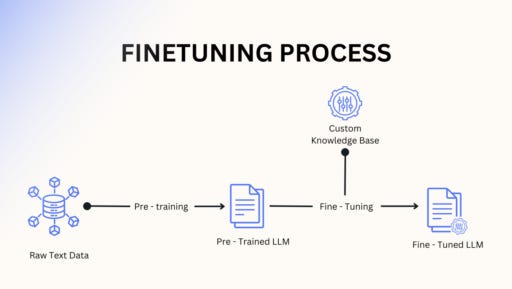

The more advanced chatbots have a training process of two or more stages. In stage one (less colloquially known as ‘pre-training’), LLMs are trained to predict the next word (strictly speaking, the next token) in a string of text.

Like the world’s best accountant, they are both predictable and boring. And that’s not necessarily a bad thing. I want my chefs fat, my pilots sober and my money men so boring they’re next in line to lead the Green Party.
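To show what “predict the next word” means at its most basic, here is a deliberately tiny Python sketch. It is a toy bigram counter, not how an LLM actually works: real models use neural networks trained on vast corpora rather than simple word counts. The corpus and function names are made up for illustration. But the spirit (learn from text what tends to come next, then pick the likeliest option) is the same, and so is the boring predictability.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count which word follows which: a toy stand-in for pre-training."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = model.get(word.lower())  # .get() avoids creating empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical miniature "training corpus"
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # prints: cat
print(predict_next_word(model, "sat"))  # prints: on
```

Ask it the same question twice and you get the same answer twice. Monkey see, monkey do.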

Stage two is where things get a little fancier. In the ‘post-training’ phase, models are trained to generate ‘quality’ responses to a prompt. They are fine-tuned using techniques like reinforcement learning, where responses are graded and the model is nudged towards the better ones.

Over time, the LLMs, like Pavlov’s dog, are either rewarded or reprimanded based on the quality of their responses.

In phase one, the model ‘understands’ (definitely in inverted commas) a latent representation of the world. In phase two, its knowledge is honed to generate the best quality response.

A quick word on temperature

At a temperature of zero, an LLM always picks its most likely next word, so the same prompt will produce (near enough) the same response time after time.

Higher temperatures (closer to 1.0) increase randomness and creativity. Lower temperatures (closer to 0) make the model’s output far more predictable and consistent.

So, your use case determines the appropriate temperature settings. Coding should be set closer to zero. Creative, more content-focused tasks should be closer to one.
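To make the mechanics concrete, here is a minimal Python sketch of temperature sampling, assuming the model has already scored a handful of candidate next words. The words and scores (logits) are invented for illustration; a real model scores tens of thousands of tokens at every step. Dividing the scores by the temperature before the softmax sharpens the odds when the temperature is low and flattens them when it is high. A temperature of 0 is treated here as greedy decoding (always take the top word).

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature (must be > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use greedy decoding for 0")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, logits, temperature, rng=random):
    """Pick the next word: greedy at temperature 0, weighted random otherwise."""
    if temperature == 0:
        best = max(range(len(words)), key=lambda i: logits[i])
        return words[best]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical candidates and scores for "The cat sat on the ..."
words = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, 0.1]

for t in (0.2, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

print(sample_next_word(words, logits, 0))  # always prints: mat
```

At 0.2 almost all of the probability piles onto “mat”; at 1.0 “sofa”, “roof” and even “moon” get a realistic chance. That is the whole trade-off between precision for coding and variety for creative work.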

I have already talked about this in my article on how to build a brand post-AI. But I highly recommend reading this very good guide on how temperature scales work with LLMs and how they impact the user base.

What does the data tell us?

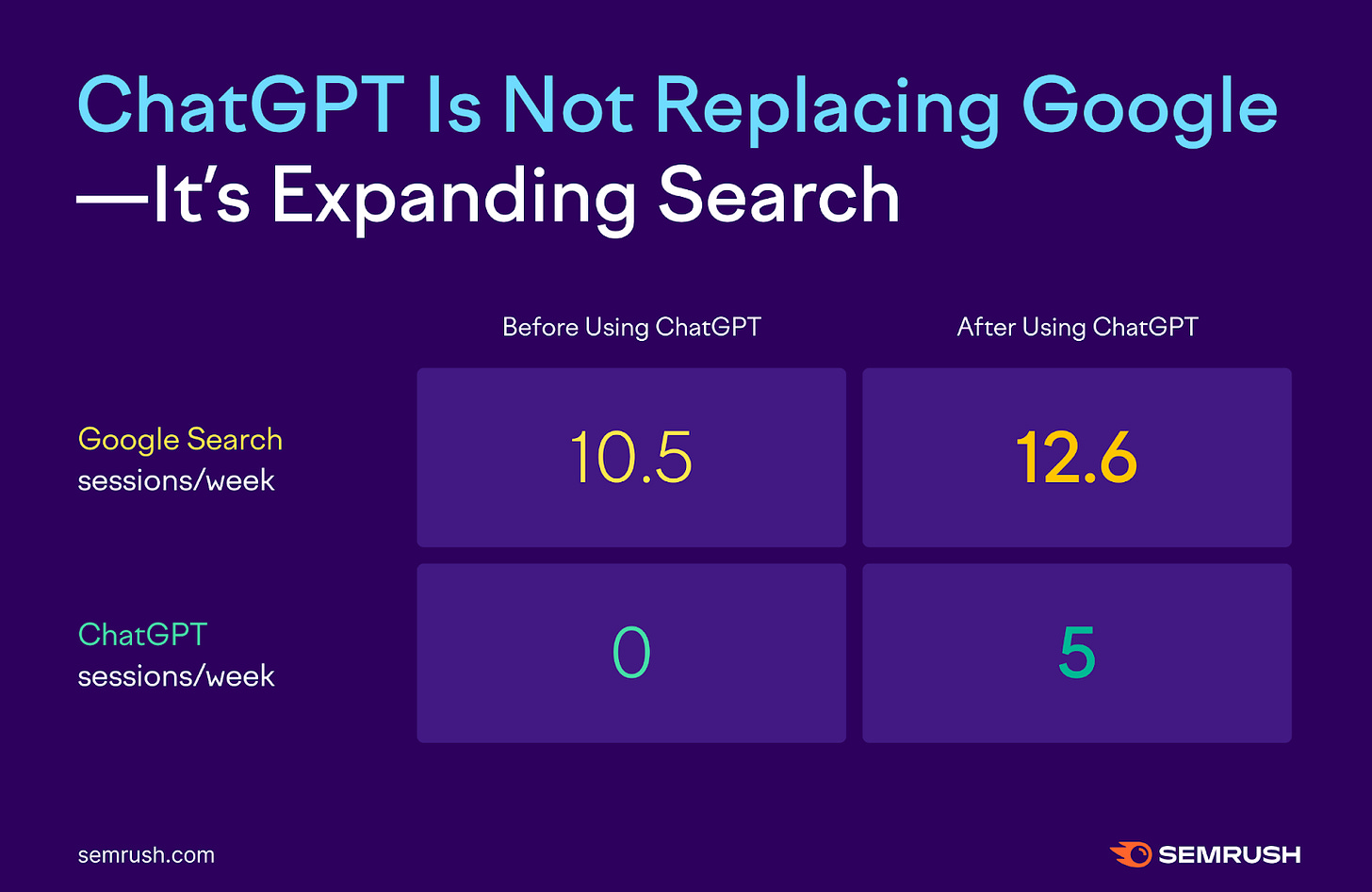

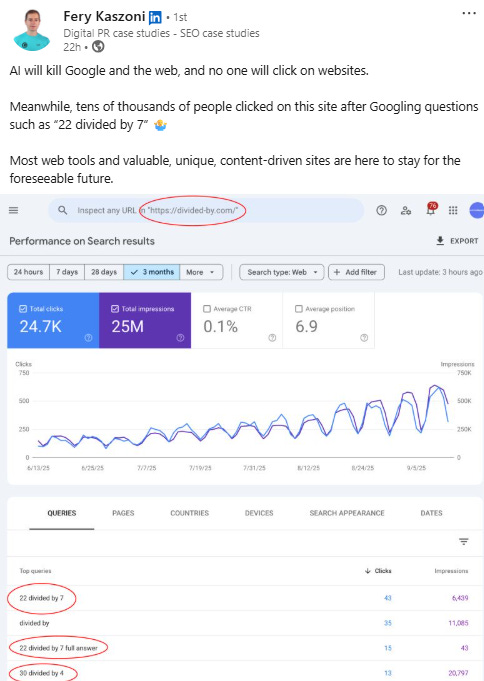

That LLMs are not a direct replacement for search. Not even that close IMO. This Semrush study highlighted that LLM super users increased the amount of traditional searches they were doing. The expansion theory seems to hold true.

But they have brought on a fundamental shift in how people access and interact with information. Conversational interfaces have incredible value. Particularly in a workplace format.

Who knew we were so fucking lazy?

1. Guidance, Seeking Information and Writing dominate

These top three use cases account for 80% of all human-robot conversations. Practical guidance, seeking information and please help me write something bland and lacking any kind of passion or insight, wondrous robot.

I will concede that the majority of Writing queries are for editing existing work. Still. If I read something written by AI, I will feel duped. And deception is not an attractive quality.

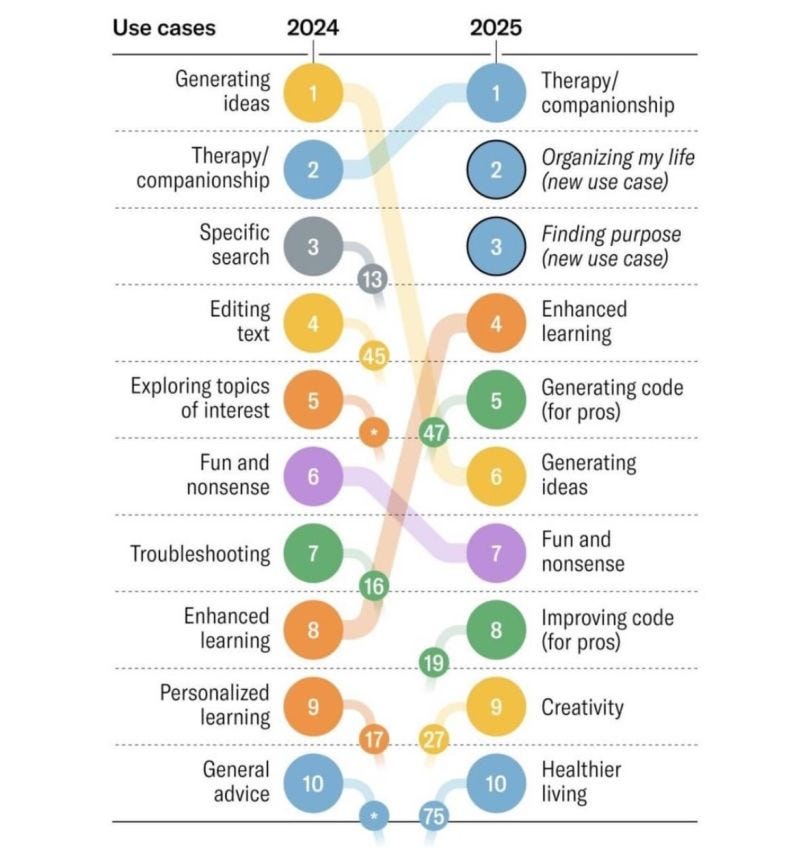

2. Non-work related usage is increasing

Non-work-related messages grew from 53% of all usage to more than 70% by July 2025.

LLMs have become habitual. Particularly when it comes to helping us make the right decisions. Both in and out of work.

3. Writing is the most common workplace application

Writing is the most common work use case, accounting for 40% of work-related messages on average in June 2025.

About two-thirds of all Writing messages are requests to modify existing user text rather than create new text from scratch.

I know enough people who just use LLMs to help them write better emails. I almost feel sorry for the tech bros that the primary use cases for these tools are so lacking in creativity.

4. Less so coding

Computer coding queries are a relatively small share, at only 4.2% of all messages*

This feels very counter-intuitive, but specialist bots like Claude or tools like Lovable are arguably better alternatives for coding.

This is a point of note. Specialist LLM usage will grow and will likely dominate specific industries because they will be able to develop better quality outputs. The specialised stage two style training makes for a far superior product.

*Compared to 33% of work-related Claude conversations

It’s important to note that other studies have some very different takes on what people use LLMs for. So this isn’t as cut and dried as we might think. I’m sure things will continue to change.

5. Men no longer dominate

Early adopters were disproportionately male (around 80% with typically masculine names)

That number declined to 48% by June 2025, with active users now slightly more likely to have typically feminine names.

Sure, we men have our flaws. Throughout history maybe we’ve been a tad quick to battle and a little domineering. But it’s good to see parity.

6. Asking and Doing queries dominate

89% of all queries are Asking and Doing related

49% Asking and 40% Doing, with just 11% for Expressing

Asking messages have grown faster than Doing messages over the last year and are rated higher quality

7. Relationships and personal reflection are not prominent

There have been a number of studies that state that LLMs have become personal therapists for people (see above)

However, relationships and personal reflection only account for 1.9% of total messages according to OpenAI

8. The bloody youth (*shakes fist*)

Nearly half of all messages sent by adults were from users under the age of 26

This coincides perfectly with Kevin Indig’s research on how different demographic types consume AI Overviews. Young people are a) more likely to trust it and b) more likely to adopt it

Takeaways

I don’t think LLMs are a disaster for publishers. Sure, they don’t send any fucking referral traffic and have started to remove citations for non-paying users (classic). But none of these tech-heads are going to give us anything.

It’s a race to the moon and we’re the dog they sent on the test flight.

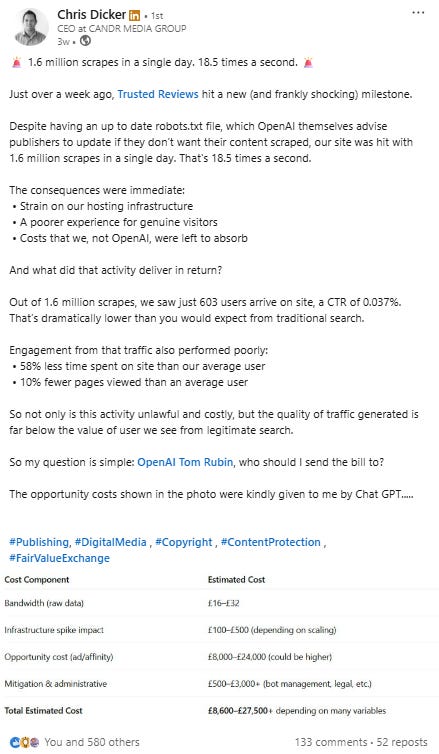

But if you’re a publisher with an opinion, an audience and - hopefully - some brand depth and assets to hand, you’ll be ok. Although their crawling behaviour is getting out of hand.

One of the most practical outcomes we as publishers can take from this data is the apparent change in intents. For eons we’ve been lumbered with navigational, informational, commercial and transactional.

Now we have Doing. Or Generating. And it’s huge.

SEO isn’t dead for publishers. But we do need to do more than just keep publishing content. There’s a lot to be said for espousing the values of AI, whilst keeping it at arm’s length.

Think BBC Verify. Content that can’t be synthesised by machines because it adds so much value. Tools and linkable assets. Real opinions from experts pushed to the fore.


But it’s hard to scale that quality. Programmatic SEO can drive amazing value. As can tools. Tools that answer users’ ‘Doing’ queries time after time. We have to build things that add value outside of the existing corpus.

And if your audience is generally younger and more trusting, you’re going to have to lean into this more.