Information Retrieval Part 3: Vectorisation and Transformers (not the film)

Retrieval systems haven't focused solely on keywords for a long time. They can and do 'understand' documents and items as part of the wider corpus. This is the vector space model in action.

Information retrieval systems are designed to satisfy a user. To make a user happy with the quality of their recall. It’s important we understand that. Every system and its inputs and outputs are designed to provide the best user experience.

From the training data to similarity scoring and a machine’s ability to ‘understand’ our tired, sad bullshit - this is the third in a series I’ve titled information retrieval for morons.

TL;DR

In the vector space model, the distance between vectors represents the relevance (similarity) between the documents or items

Vectorisation has allowed search engines to perform concept searching instead of word searching. It is the alignment of concepts, not letters or words

Longer documents contain more terms, so they naturally match more queries. To combat this, document length is normalised and relevance is prioritised

Google has been doing this for over a decade. Maybe for over a decade, you have too

Things you should know before we start

Some concepts and systems you should be aware of before we dive in.

I don’t remember all of these and neither will you. Just try to enjoy yourself and hope that through osmosis and consistency you vaguely remember things over time.

TF-IDF stands for term frequency-inverse document frequency. It is a numerical statistic used in NLP and information retrieval to measure how important a term is to a document, relative to the rest of the corpus.

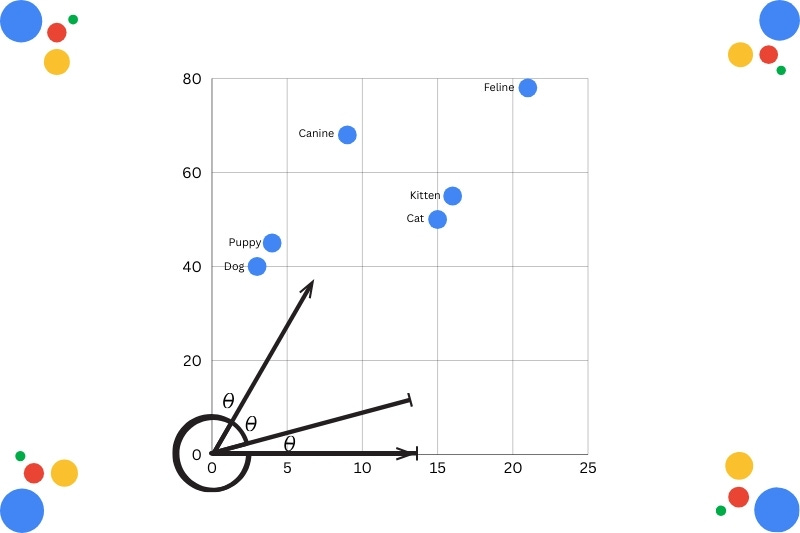
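If TF-IDF still feels abstract, here is a minimal sketch of one common variant (raw term share multiplied by the natural log of N over document frequency). The three documents are invented for illustration, and real systems usually add smoothing and other tweaks on top.

```python
import math
from collections import Counter

# Toy corpus: hypothetical documents, invented for illustration
docs = {
    "d1": "cricket bat and cricket ball",
    "d2": "the bat flew out of the cave",
    "d3": "the ball went over the boundary",
}
tokenised = {name: text.split() for name, text in docs.items()}

def tf(term, tokens):
    # Term frequency: share of the document's tokens that are this term
    return Counter(tokens)[term] / len(tokens)

def idf(term, corpus):
    # Inverse document frequency: log(N / number of documents containing the term)
    containing = sum(1 for tokens in corpus.values() if term in tokens)
    return math.log(len(corpus) / containing) if containing else 0.0

def tf_idf(term, doc_name, corpus):
    return tf(term, corpus[doc_name]) * idf(term, corpus)

for term in ("cricket", "bat", "the"):
    print(term, round(tf_idf(term, "d1", tokenised), 3))
```

In d1, 'cricket' scores highest because it is frequent in that document and appears nowhere else. 'bat' appears in two of the three documents, so it is worth less. 'the' is not in d1 at all, so it scores zero.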

Cosine similarity measures the cosine of the angle between two vectors, ranging from -1 to 1. A smaller angle (a cosine closer to 1) implies higher similarity.

The bag-of-words model is a way of representing text data when modelling text with machine learning algorithms.

Feature extraction/encoding models are used to convert raw text into numerical representations that can be processed by machine learning models.

Euclidean distance measures the straight-line distance between two points in vector space to calculate data similarity (or dissimilarity).

Doc2Vec (an extension of Word2Vec) is designed to represent the similarity (or lack of it) between documents as opposed to words.
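To make a few of those definitions less hand-wavy, here is a rough sketch that turns three made-up sentences into bag-of-words vectors and compares them with cosine similarity and Euclidean distance. The sentences and the helper names (`bag_of_words`, `cosine_similarity`, `euclidean_distance`) are my own, purely for illustration.

```python
import math
from collections import Counter

def bag_of_words(text, vocabulary):
    # Count how often each vocabulary term occurs; word order is thrown away
    counts = Counter(text.lower().split())
    return [counts[term] for term in vocabulary]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty vector has no direction to compare
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

texts = [
    "the bat flew out of the cave",
    "a bat flew into the cave",
    "the batsman hit the ball",
]
vocabulary = sorted({word for text in texts for word in text.split()})
vectors = [bag_of_words(text, vocabulary) for text in texts]

print(round(cosine_similarity(vectors[0], vectors[1]), 3))   # two cave sentences
print(round(cosine_similarity(vectors[0], vectors[2]), 3))   # cave vs cricket
print(round(euclidean_distance(vectors[0], vectors[1]), 3))
```

The two cave sentences come out more similar than the cave and cricket pair. Note how much of the cricket score comes from 'the', which is exactly why raw counts get weighted with something like TF-IDF.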

What is the vector space model?

The Vector Space Model (VSM) is an algebraic model that represents text documents or items as ‘vectors.’ This representation allows systems to measure the distance between each vector.

That distance represents the similarity between terms or items.

Commonly used in information retrieval, document ranking, and keyword extraction, vector models create structure. This structured, high-dimensional numerical space enables the calculation of relevance via similarity measures like cosine similarity.

Terms are assigned values. If a term appears in the document, its value is non-zero. Worth noting that terms are not just individual keywords. They can be phrases, sentences and entire documents.

How does it work?

Once queries, phrases and sentences are assigned values, the document can be scored. It has a physical place in the vector space as chosen by the model.

Documents can then be compared to one another based on the inputted query. You generate similarity scores at scale. This is known as semantic similarity, where a set of documents is scored and positioned in the index based on their meaning.

Not just their lexical similarity.
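A rough sketch of what that looks like in practice. The three-dimensional vectors below are hand-written stand-ins for embeddings (the dimensions loosely mean animals, sport and geology); real embeddings are learned by a model and have hundreds of dimensions. Everything here is hypothetical.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical 'meaning' vectors: [animals, sport, geology]
documents = {
    "Where do fruit bats roost?": [0.9, 0.0, 0.3],
    "Choosing a willow cricket bat": [0.1, 0.9, 0.0],
    "Flying mammals that live in caverns": [0.8, 0.0, 0.5],
}
query = [0.85, 0.05, 0.4]  # stand-in vector for the query 'bats in caves'

scores = {title: cosine_similarity(query, vector) for title, vector in documents.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for title in ranked:
    print(round(scores[title], 3), title)
```

'Flying mammals that live in caverns' shares no words with 'bats in caves', yet it scores almost as high as the fruit bat page. The cricket bat page shares the word 'bat' and comes last. Meaning, not letters.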

I know this sounds a bit complicated, but think of it like this:

Words on a page can be manipulated. Keyword stuffed. They’re too simple. But if you can calculate meaning (of the document), you’re one step closer to a quality output.

Why does it work so well?

Machines don’t just like structure. They bloody love it.

Fixed length (or styled) inputs and outputs create predictable, accurate results. The more informative and compact a dataset, the better quality classification, extraction and prediction you will get.

The problem with text is that it doesn’t have much structure. At least not in the eyes of a machine. It’s messy. This is why the vector space model has such an advantage over the classic Boolean Retrieval Model.

In Boolean Retrieval Models, documents are retrieved based on whether they satisfy the conditions of a query that uses Boolean logic. It treats each document as a set of words or terms and uses AND, OR and NOT operators to return all results that fit the bill.
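For comparison, a minimal sketch of Boolean retrieval using an inverted index and Python set operations. The documents and the `posting` helper are invented for illustration.

```python
# Toy documents: hypothetical, for illustration only
docs = {
    1: "cricket bat and ball",
    2: "the bat flew out of the cave",
    3: "vampire bat in a cave",
}

# Inverted index: term -> set of IDs of the documents containing it
index = {}
for doc_id, text in docs.items():
    for term in set(text.split()):
        index.setdefault(term, set()).add(doc_id)

def posting(term):
    # Documents containing the exact term (empty set if the term is unknown)
    return index.get(term, set())

bat_and_cave = posting("bat") & posting("cave")               # AND
bat_not_cave = posting("bat") - posting("cave")               # AND NOT
cricket_or_vampire = posting("cricket") | posting("vampire")  # OR

print(bat_and_cave, bat_not_cave, cricket_or_vampire)
print(posting("cavern"))  # no exact match, so nothing comes back
```

A document is either in the result set or it isn't. There is no score, no ranking, and 'cavern' returns nothing even though two documents are plainly about caves.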

Its simplicity has its uses, but it cannot interpret meaning.

Think of it more like data retrieval than identifying and interpreting information. We fall into the term frequency (TF) trap too often with more nuanced searches. Easy, but lazy in today’s world.

Whereas the vector space model interprets actual relevance to the query and doesn’t require exact match terms. That’s the beauty of it.

It’s this structure that creates much more precise recall.

The transformer revolution (not Michael Bay)

Unlike Michael Bay’s series aimed at pubescent boys, the real transformer architecture replaced older, static embedding methods (like Word2Vec) with contextual embeddings.

While static models assigned one vector to each word, Transformers generate dynamic representations that change based on the surrounding words in a sentence.

And yes, Google has been doing this for some time. It’s not new. It’s not GEO. It’s just modern information retrieval that ‘understands’ a page.

I mean, obviously not. But you, as a hopefully sentient, breathing being, understand what I mean. But transformers, well, they fake it:

Transformers weight input data by significance

The model pays more attention to words that demand or provide extra context

Let me give you an example.

‘The bat’s teeth flashed as it flew out of the cave.’

Bat is an ambiguous term. Ambiguity is bad in the age of AI.

But transformer architecture links bat with ‘teeth,’ ‘flew’ and ‘cave,’ signalling that bat is far more likely to be a bloodsucking rodent* than something a gentleman would use to caress the ball for a boundary in the world’s finest sport.

*No idea if a bat is a rodent, but it looks like a fucking rat with wings
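Here is a toy sketch of that weighting, loosely modelled on scaled dot-product attention. The two-dimensional token vectors are hand-written (dimension 0 is roughly 'animal-ness', dimension 1 'sport-ness'), and I am using them directly as queries, keys and values. A real transformer learns separate projections for each, across many layers and heads, so treat this as an illustration only.

```python
import math

# Hypothetical token vectors: [animal-ness, sport-ness]
tokens = {
    "bat":   [0.6, 0.6],   # ambiguous on its own
    "teeth": [1.0, 0.0],
    "flew":  [0.9, 0.1],
    "cave":  [0.8, 0.0],
    "the":   [0.1, 0.1],   # carries very little meaning
}

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query_word, tokens):
    names = list(tokens)
    query = tokens[query_word]
    scale = math.sqrt(len(query))
    # Score every token against the query word, then normalise into weights
    scores = [sum(q * k for q, k in zip(query, tokens[n])) / scale for n in names]
    weights = softmax(scores)
    # The contextual vector is the weighted average of all token vectors
    context = [
        sum(w * tokens[n][i] for w, n in zip(weights, names))
        for i in range(len(query))
    ]
    return dict(zip(names, weights)), context

weights, context = attend("bat", tokens)
print({name: round(w, 3) for name, w in weights.items()})
print([round(x, 3) for x in context])
```

'teeth', 'flew' and 'cave' get more weight than 'the', and the resulting vector for 'bat' leans heavily towards the animal dimension. Swap the neighbours for 'wicket' and 'boundary' style vectors and it would lean the other way.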

BERT strikes back

BERT. Bidirectional Encoder Representations from Transformers. Shrugs.

This is how Google has worked for years. By applying this type of contextually aware understanding of the semantic relationships between words and documents. It’s a huge part of the reason why Google is so good at mapping and understanding intent and how it shifts over time.

More recent BERT successors (such as Microsoft’s DeBERTa) allow words to be represented by two vectors - one for meaning (content) and one for relative position in the sequence. This is known as Disentangled Attention. It provides more accurate context.

Yep, sounds weird to me too.

BERT processes the entire sequence of words simultaneously. This means context is applied from the entirety of the input it is given (not just the few surrounding terms).

Synonyms baby

Launched in 2015, RankBrain was Google’s first deep learning system. Well, that I know of anyway. It was designed to help the search algorithm understand how words relate to concepts.

This was kind of the peak search era. Anyone could start a website about anything. Get it up and ranking. Make a load of money. Not need any kind of rigour.

Halcyon days.

With hindsight, these days weren’t great for the wider public. Getting advice on funeral planning and commercial waste management from a spotty 23 year old’s bedroom in Halifax.

As new and evolving queries surged, RankBrain and the subsequent neural matching were vital.

Then there was MUM. Google’s ability to ‘understand’ text, images and visual content across multiple languages simultaneously.

Combatting document length issues

Document length was an obvious problem 10 years ago. Maybe less. Longer articles, for better or worse, always did better. I remember writing 10,000 word articles on some nonsense about website builders and sticking it on a homepage.

Even then that was a shit idea…

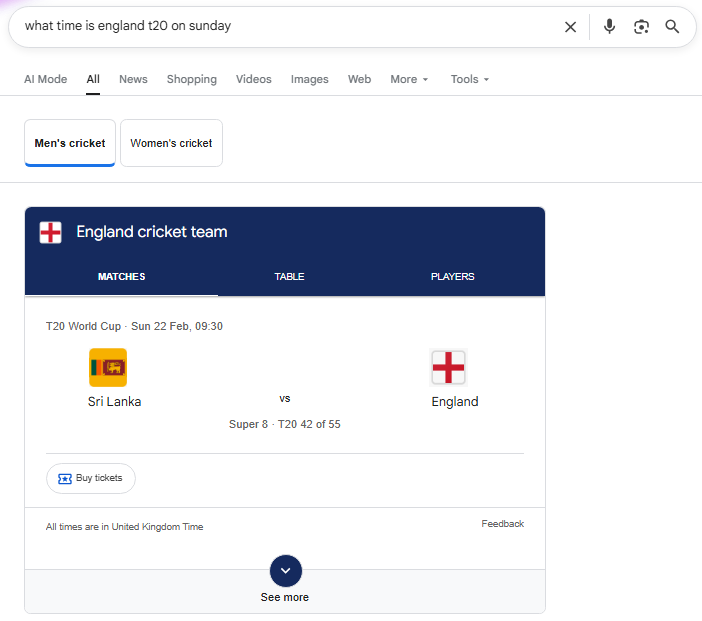

In a world where queries and documents are mapped to numbers, you could be forgiven for thinking that longer documents will always be surfaced over shorter ones.

Remember 10-15 years ago when everyone was obsessed with every article being 2,000 words?

‘That’s the optimal length for SEO.’

If you see another ‘what time is x’ 2,000 word article, you have my permission to fucking shoot me.

Longer documents will – as a result of containing more terms – have higher TF values. They also contain more distinct terms. These factors can conspire to raise the scores of longer documents.

Hence why, for a while, they were the zenith of our shitty content production.

Longer documents can broadly be lumped into two categories:

Verbose documents that essentially repeat the same content (hello keyword stuffing my old friend)

Documents covering multiple topics, in which the search terms probably match small segments of the document, but not all of it

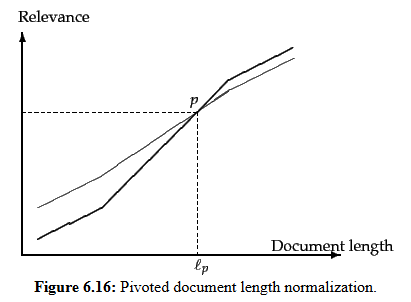

To combat this obvious issue, a form of compensation for document length is used, known as Pivoted Document Length Normalisation. This adjusts scores to counteract the natural bias longer documents have.
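A minimal sketch of the idea, assuming the usual form of the pivoted normaliser: (1 - slope) × pivot + slope × document length, with the pivot set to the average document length. I have divided it through by the pivot so an average-length document is left untouched. The slope of 0.25, the raw scores and the word counts are all made up.

```python
def pivoted_norm(doc_length, avg_length, slope=0.25):
    # (1 - slope) * pivot + slope * doc_length, divided through by the pivot.
    # Returns 1.0 for an average-length document, more for longer ones.
    return (1.0 - slope) + slope * (doc_length / avg_length)

# Hypothetical (raw relevance score, length in words) pairs
docs = {
    "short answer": (6.0, 300),
    "long ramble": (8.0, 3000),
}
avg_length = sum(length for _, length in docs.values()) / len(docs)

adjusted = {
    name: raw / pivoted_norm(length, avg_length)
    for name, (raw, length) in docs.items()
}

for name, score in adjusted.items():
    print(name, round(score, 2))
```

Before normalisation the long ramble wins on raw score. After it, the short answer edges ahead, because the long document's score is divided by a bigger number. The slope controls how harsh that penalty is.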

The cosine distance should be used because we do not want to favour longer (or shorter) documents, but to focus on relevance. Leveraging this normalisation prioritises relevance over term frequency.

It’s why cosine similarity is so valuable. It is robust to document length. A short and long answer can be seen as topically identical if they point in the same direction in the vector space.
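You can see this with a quick sketch. Below, the 'long' document is just the short one repeated ten times (the term counts are invented). Cosine similarity doesn't move; Euclidean distance balloons.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

query = [1, 1, 0]                        # hypothetical term counts for the query
short_doc = [2, 1, 0]                    # a short answer
long_doc = [x * 10 for x in short_doc]   # the same content repeated ten times

cos_short = cosine_similarity(query, short_doc)
cos_long = cosine_similarity(query, long_doc)
dist_short = math.dist(query, short_doc)  # Euclidean distance (Python 3.8+)
dist_long = math.dist(query, long_doc)

print(round(cos_short, 4), round(cos_long, 4))
print(round(dist_short, 2), round(dist_long, 2))
```

Same direction, same cosine. Only the magnitude changed, and cosine ignores magnitude.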

Well, so what?

Great question.

Well, no one’s expecting you to understand the intricacies of a vector database. You don’t really need to know that databases create specialised indices to find close neighbours without checking every single record.

This is just for companies like Google to strike the right balance between performance, cost and operational simplicity.

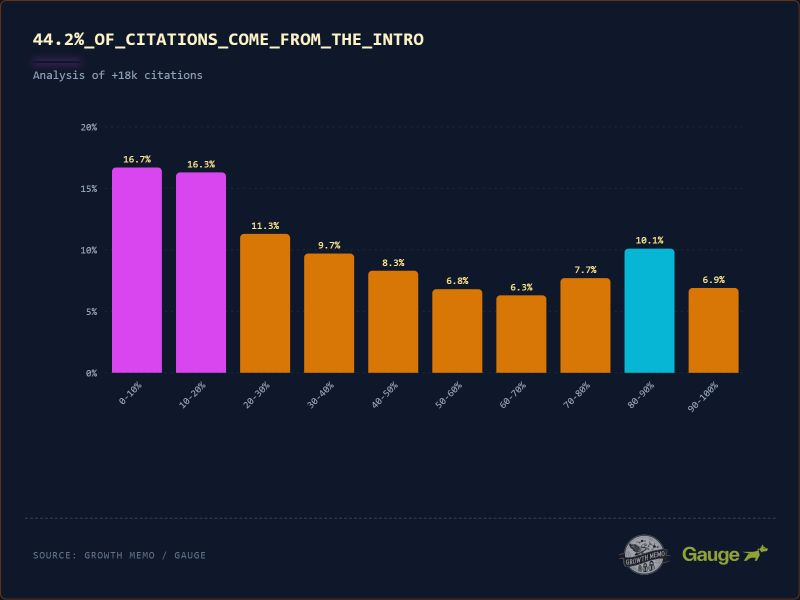

Kevin Indig’s latest, excellent research shows that 44.2% of all citations in ChatGPT originate from the first 30% of the text. The probability of citation drops significantly after this initial section, creating a ‘ski ramp’ effect.

Even more reason not to mindlessly create fucking massive documents because someone told you to.

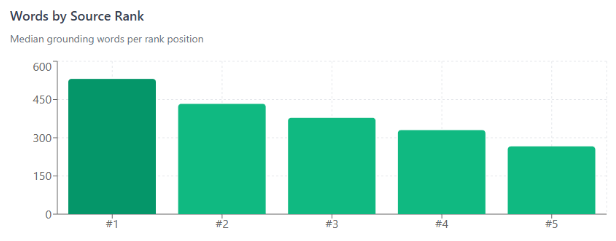

In ‘AI search,’ a lot of this comes down to tokens. According to Dan Petrovic’s always excellent work, each query has a fixed grounding budget of approximately 2,000 words total, distributed across sources by relevance rank.

In Google at least. And your rank determines your score. So get SEO-ing.

Metehan’s study on what 200,000 Tokens Reveal About AEO/GEO really highlights how important this is. Or will be. Not just for our jobs, but biases and cultural implications.

As text is tokenised (compressed and converted into a sequence of integer IDs), this has cost and accuracy implications.

Plain English prose is the most token-efficient format at 5.9 characters per token. Let’s call it 100% relative efficiency. A baseline.

Turkish prose has just 3.6. This is 61% as efficient.

Markdown tables 2.7. 46% as efficient.
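The arithmetic behind those percentages, as a quick sketch. The characters-per-token figures are the ones quoted above; the 10,000 character page is a hypothetical example of mine.

```python
import math

# Characters per token, as quoted above
chars_per_token = {
    "English prose": 5.9,
    "Turkish prose": 3.6,
    "Markdown tables": 2.7,
}
baseline = chars_per_token["English prose"]

def relative_efficiency(cpt):
    # Efficiency relative to plain English prose (1.0 means equally efficient)
    return cpt / baseline

def tokens_needed(characters, cpt):
    # Rough token count for a given amount of text
    return math.ceil(characters / cpt)

page_characters = 10_000  # hypothetical page size
for fmt, cpt in chars_per_token.items():
    print(fmt, f"{relative_efficiency(cpt):.0%}", tokens_needed(page_characters, cpt))
```

The same 10,000 characters cost roughly 1,700 tokens as English prose, nearly 2,800 in Turkish and about 3,700 as markdown tables. More than double the bill for the same amount of text.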

Languages are not created equal. In an era where capex costs are soaring and AI firms have struck deals I’m not sure they can cash, this matters.

Top tips

Well, as Google has been doing this for some time, the same things should work across both interfaces.

Answer the fucking question. My god. Get to the point. I don’t care about anything other than what I want. Give it to me immediately (spoken as a human and a machine).

So frontload your important information. I have no attention span. Neither do transformer models.

Disambiguate. Entity optimisation work. Connect the dots online. Claim your knowledge panel. Authors, social accounts, structured data, building brands and profiles.

Excellent E-E-A-T. Deliver trustworthy information in a manner that sets you apart from the competition.

Create keyword rich internal links that help define what the page and content is about. Part disambiguation. Part just good UX.

If you want something focused on LLMs, be more efficient with your words.

Using structured lists can reduce token consumption by 20-40% because they remove fluff. Not because they’re more efficient*.

Use commonly known abbreviations to also save tokens

*Interestingly they are less efficient than traditional prose

Almost all of this is about giving people what they want quickly and removing any ambiguity. In an internet full of shit, doing this really, really works.

Last bits

There is some discussion around whether markdown for agents can help strip out the fluff from HTML on your site. So agents could bypass the cluttered HTML and get straight to the good stuff.

How much of this could be solved by having a less fucked up approach to semantic HTML I don’t know. Anyway, one to watch.

Very SEO. Much AI.
