Information Retrieval Part 1: Disambiguation

In an era of smoke and mirrors, spam problems, fake authors and PR experts, demonstrating real clarity to humans and machines is everything

TL;DR

Disambiguation is the process of resolving ambiguity and uncertainty in data. It’s crucial in modern-day SEO and information retrieval.

Search engines and LLMs reward content that is easy to ‘understand’, not necessarily the content that is best.

The clearer and better structured your content, the harder it is to replace.

You have to reinforce how your brand and products are understood. When grounding is required, models favour sources they recognise from training data.

The internet has changed. Channels have begun to homogenise. Google is trying to become something of a destination and the individual content creator is more powerful than ever.

Oh and we don’t need to click on anything.

But what makes for great content hasn’t changed. AI and LLMs haven’t changed what people want to consume. They’ve changed what we need to click on. Which I don’t necessarily hate.

As long as you’ve been creating well-structured, engaging, educational or entertaining content for years, all this chat of chunking is a bit smoke and mirrors to me.

“If it walks like a duck and talks like a duck, it’s probably a grifter selling you link building services or GEO.”

However, it is absolutely not all bullshit. Concepts like ambiguity are a more destructive force than ever. If you permit a quick double negative, you cannot not be clear.

The clearer you are. The more concise. The more structured on and off page. The better chance you stand. There’s no place for ambiguous phrases, paragraphs and definitions.

Removing that ambiguity is known as disambiguation.

What is disambiguation?

Disambiguation is the process of resolving ambiguity and uncertainty in data. Ambiguity is a problem on the modern-day internet. The deeper down the rabbit hole we go, the less diligence is paid towards accuracy and truth. The more clarity your surrounding context provides, the better.

It is a critical component of modern-day SEO, AI, Natural Language Processing (NLP) and information retrieval.

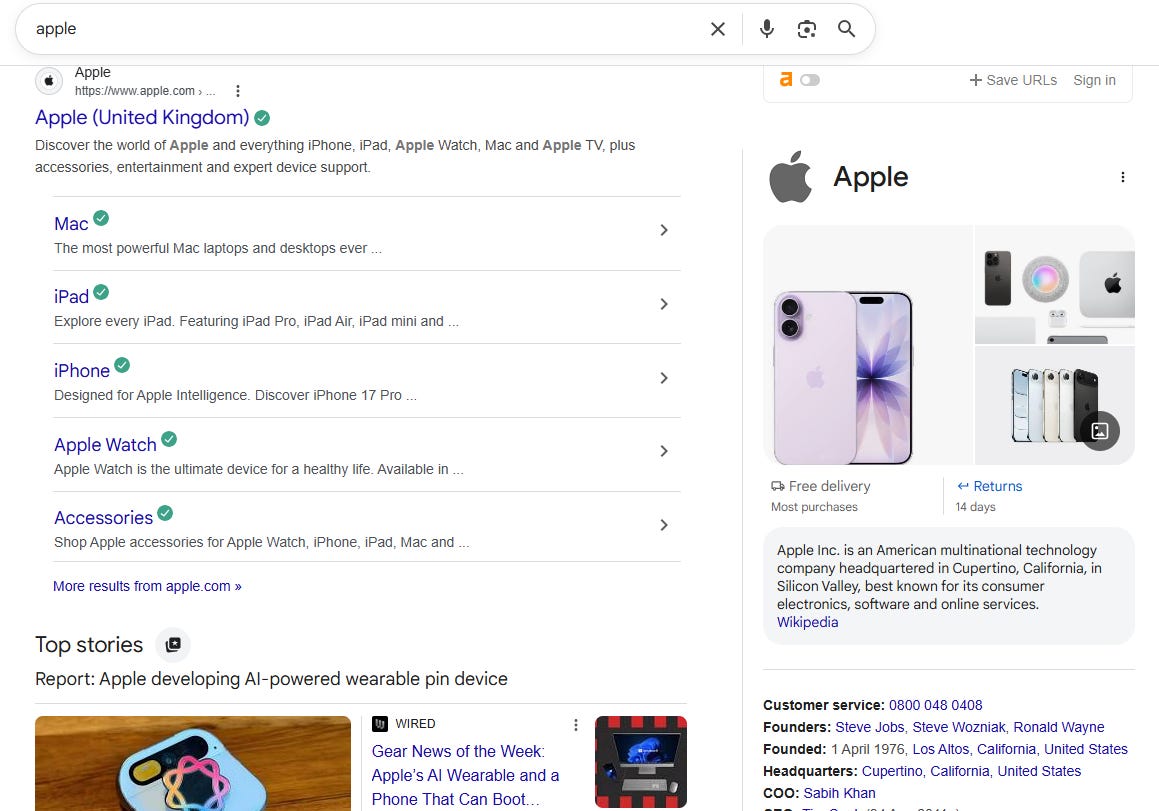

This is an obvious and overused example, but consider a term like apple. The intent and understanding behind it is vague. We don’t know whether people mean the company, the fruit or the daughter of a batshit, brain-dead celebrity.

Years ago, this type of ambiguous search would’ve yielded a more diverse set of results. But thanks to personalisation and trillions of stored interactions, Google knows what we all want. Scaled user engagement signals and an improved understanding of intent, keywords, phrases and context are fundamental here.

Yes, I could’ve thought of a better example, but I couldn’t be arsed. You see my point.
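To make the apple problem a bit more concrete, here is a minimal sketch of one classic disambiguation technique, a simplified Lesk algorithm: pick the sense whose description shares the most words with the surrounding context. The sense glosses and the stopword list are hypothetical and hand-written for illustration. Real search engines and LLMs rely on learned embeddings and behavioural signals, not word counting.

```python
import re

# HYPOTHETICAL glosses, hand-written for illustration. Real systems learn
# these associations from data rather than from a lookup table.
SENSES = {
    "apple_inc": "technology company that makes the iphone mac computers and software",
    "apple_fruit": "sweet fruit that grows on a tree eaten raw or in a pie",
    "apple_person": "celebrity child daughter named by famous parents",
}

# Function words to ignore so they don't inflate the overlap count.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "or", "and", "that", "to", "by", "is", "for", "my", "i"}


def tokens(text: str) -> set[str]:
    """Lowercase the text, split it into words and drop stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def disambiguate(context: str, senses: dict[str, str] = SENSES) -> str:
    """Pick the sense whose gloss shares the most words with the context.

    On a tie (including zero overlap) max() keeps the first sense listed.
    """
    ctx = tokens(context)
    return max(senses, key=lambda sense: len(ctx & tokens(senses[sense])))


print(disambiguate("Should I buy the new apple iphone or wait for the next mac?"))  # apple_inc
print(disambiguate("Easy apple pie recipe with fruit from the tree"))  # apple_fruit
print(disambiguate("apple"))  # apple_inc (no overlap, so the first sense wins)
```

Notice the last call: with no context at all, the sketch simply falls back to the first sense listed. That is a crude stand-in for a search engine defaulting to what most people mean, and it is exactly why the surrounding context you provide matters.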

Why should I care?

Modern-day information retrieval requires clarity. The context you provide really matters when it comes to the confidence score systems require before pulling the ‘correct’ answer.

And this context is not just present in the content.

There is a significant debate about the value of structured data in modern-day search and information retrieval. Using structured data properties like sameAs to signify exactly who an author is, and tying all of your company’s social accounts and sub-brands together, can only be a good thing.

The argument isn’t that this has no value. It makes sense.

It’s whether Google still needs it for accurate information parsing.

And whether it has value to LLMs beyond well-structured HTML.

Ambiguity and information retrieval have become incredibly hot topics in data science. Vectorisation - representing documents and queries as vectors - helps machines understand the relationships between terms.

It allows models to effectively predict what words should be present in the surrounding context. It’s why answering the most relevant questions and predicting user intent and ‘what’s next’ has been so valuable for a long time in search.

See Google’s Word2Vec for more information.
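Word2Vec’s skip-gram variant learns its vectors by training a model to predict the words that sit within a small window around each target word. The sketch below only shows how those (target, context) training pairs are generated from a sentence. The window size is an arbitrary choice, and the actual training step (a shallow neural network with negative sampling or hierarchical softmax) is left out.

```python
def skipgram_pairs(sentence: str, window: int = 2) -> list[tuple[str, str]]:
    """Generate (target, context) pairs the way skip-gram Word2Vec does.

    Each word is paired with every other word up to `window` positions either side.
    """
    words = sentence.lower().split()
    pairs = []
    for i, target in enumerate(words):
        # Clamp the window to the sentence boundaries.
        start, end = max(0, i - window), min(len(words), i + window + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((target, words[j]))
    return pairs


for pair in skipgram_pairs("apple launches new iphone", window=1):
    print(pair)
```

With `window=1`, “apple launches new iphone” yields six pairs, such as `('launches', 'apple')` and `('launches', 'new')`. Because words that keep appearing in similar contexts end up with similar vectors, the company your terms keep on the page is exactly what these models learn from.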

Google has been doing this for a long time

Do you remember what Google’s early, and official, mission statement regarding information was?

“Organise the world’s information and make it universally accessible and useful”.

Their former motto was “don’t be evil.” Which I think in more recent times they may have let slide somewhat. Or conveniently hidden it.

“Oh sorry, somebody left a pot plant in front of don’t. Sorry, sorry we’ll get right back on that.”

Organising the world’s information has become so much more effective thanks to advances in information retrieval. Originally Google thrived on straightforward keyword matching. Then they moved to tokenisation.

Their ability to break sentences into words and match short-tail queries was revolutionary. But as queries advanced and intent became less obvious, they had to evolve.

The advent of Google’s Knowledge Graph was transformational. A database of entities that helped create consistency. It created stability and improved accuracy in an ever changing web.

Now queries are rewritten at scale. Ranking is probabilistic instead of deterministic, and in some cases fan-out processes are applied to create an all-encompassing answer. It’s about matching the user’s intent at the time. It’s personalised. Contextual signals are applied to give the individual the best result for them.

Which means we lose predictability depending on temperature settings, context and inference path. There’s a lot more passage-level retrieval going on.
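Temperature is one of the knobs that controls how predictable a model’s output is. A minimal sketch, assuming three candidate answers with made-up scores (logits): dividing the scores by the temperature before a softmax sharpens the probability distribution when the temperature is low and flattens it when it is high, so the same input can lead to different outputs.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# HYPOTHETICAL scores for three candidate answers.
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a temperature of 0.2 the top answer takes over 99% of the probability. At 2.0 it drops below half, which leaves plenty of room for the model to go down a different path.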

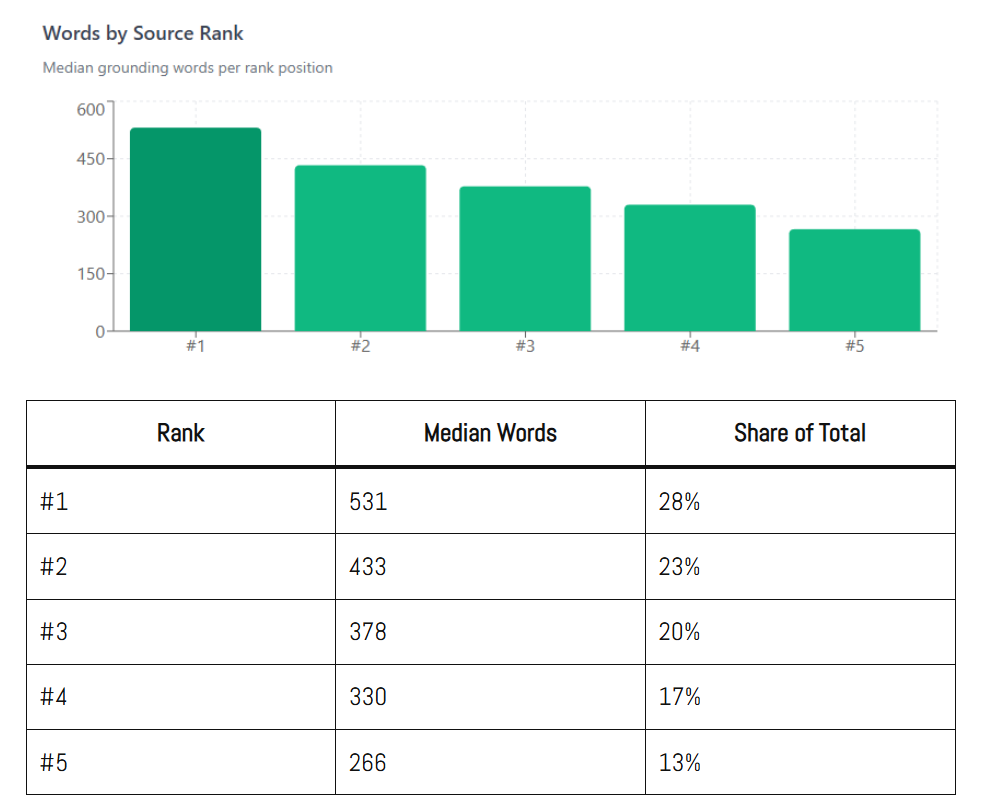

Thanks to Dan Petrovic, we know that Google doesn’t use your full page content when grounding its Gemini-powered AI systems. Each query has a fixed grounding budget of approximately 2,000 words total, distributed across sources by relevance rank.

The higher you rank in search, the more budget you are allotted. Think of this context window limit like crawl budget. Larger windows enable longer interactions, but cause performance degradation. So they have to strike a balance.
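Google hasn’t published how that budget is split, so treat this as a purely hypothetical sketch. It assumes a fixed 2,000-word budget and an invented weighting where each rank position gets half the weight of the one above it, just to show how a rank-weighted budget favours the top results.

```python
def allocate_budget(sources: list[str], budget: int = 2000, decay: float = 0.5) -> dict[str, int]:
    """Split a word budget across ranked sources (best first) using geometric decay.

    HYPOTHETICAL: the decay scheme is an illustrative assumption,
    not Google's documented method.
    """
    weights = [decay ** rank for rank in range(len(sources))]
    total = sum(weights)
    # Floor each share so the allocation never exceeds the budget.
    return {src: int(budget * w / total) for src, w in zip(sources, weights)}


# Placeholder domains, listed in ranking order.
print(allocate_budget(["site-a.example", "site-b.example", "site-c.example", "site-d.example"]))
```

Flooring leaves a couple of words unallocated. The takeaway is the shape: in this made-up scheme the top result gets more than half the budget and the fourth gets well under a tenth.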

Hummingbird, BERT, RankBrain - foundational semantic understanding

These older algorithm shifts were pivotal in making Google’s systems treat language and meaning differently.

Hummingbird (2013) Helped Google identify entities and things quickly, with greater precision. This was a step toward semantic interpretation and entity recognition. Think of keywords at a page level. Not query level.

RankBrain (2015) To combat the ever-increasing and never before seen queries, Google introduced machine learning to interpret unknown queries and relate them to known concepts and entities.

RankBrain was built on the success of Hummingbird’s semantic search. By mastering NLP systems, Google began mapping words to mathematical patterns (vectorisation) to better serve new and ever-evolving queries.

These vectors help Google ‘guess’ the intent of queries it has never seen before by finding their nearest mathematical neighbours.
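Here is a minimal sketch of that nearest-neighbour idea, assuming tiny hand-made three-dimensional vectors. Real embeddings are learned and have hundreds of dimensions, and Google hasn’t published RankBrain’s internals, so this only illustrates the general principle: an unseen query is mapped to the known query whose vector points in the most similar direction (cosine similarity).

```python
import math

# HYPOTHETICAL toy embeddings for queries the system already understands.
KNOWN_QUERIES = {
    "apple iphone price": [0.9, 0.1, 0.0],
    "apple pie recipe": [0.1, 0.9, 0.2],
    "celebrity baby names": [0.0, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def nearest_known_query(vector: list[float]) -> str:
    """Return the known query most similar to an unseen query's vector."""
    return max(KNOWN_QUERIES, key=lambda q: cosine(vector, KNOWN_QUERIES[q]))


# HYPOTHETICAL vector for an unseen query like "how much is the new iphone".
print(nearest_known_query([0.8, 0.2, 0.1]))  # apple iphone price
```

Cosine similarity compares direction rather than length, which is why it is a common default for comparing embeddings.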

The Knowledge Graph Updates

In July 2023, Google rolled out a major Knowledge Graph update. I think people in SEO called it the Killer Whale Update, but I can’t remember who coined the phrase. Or why. Apologies. It was designed to accelerate the growth of the graph and reduce its dependence on third-party sources like Wikipedia.

As somebody who has spent a long time messing around with entities, I can really understand why. It’s a giant, expensive time-suck.

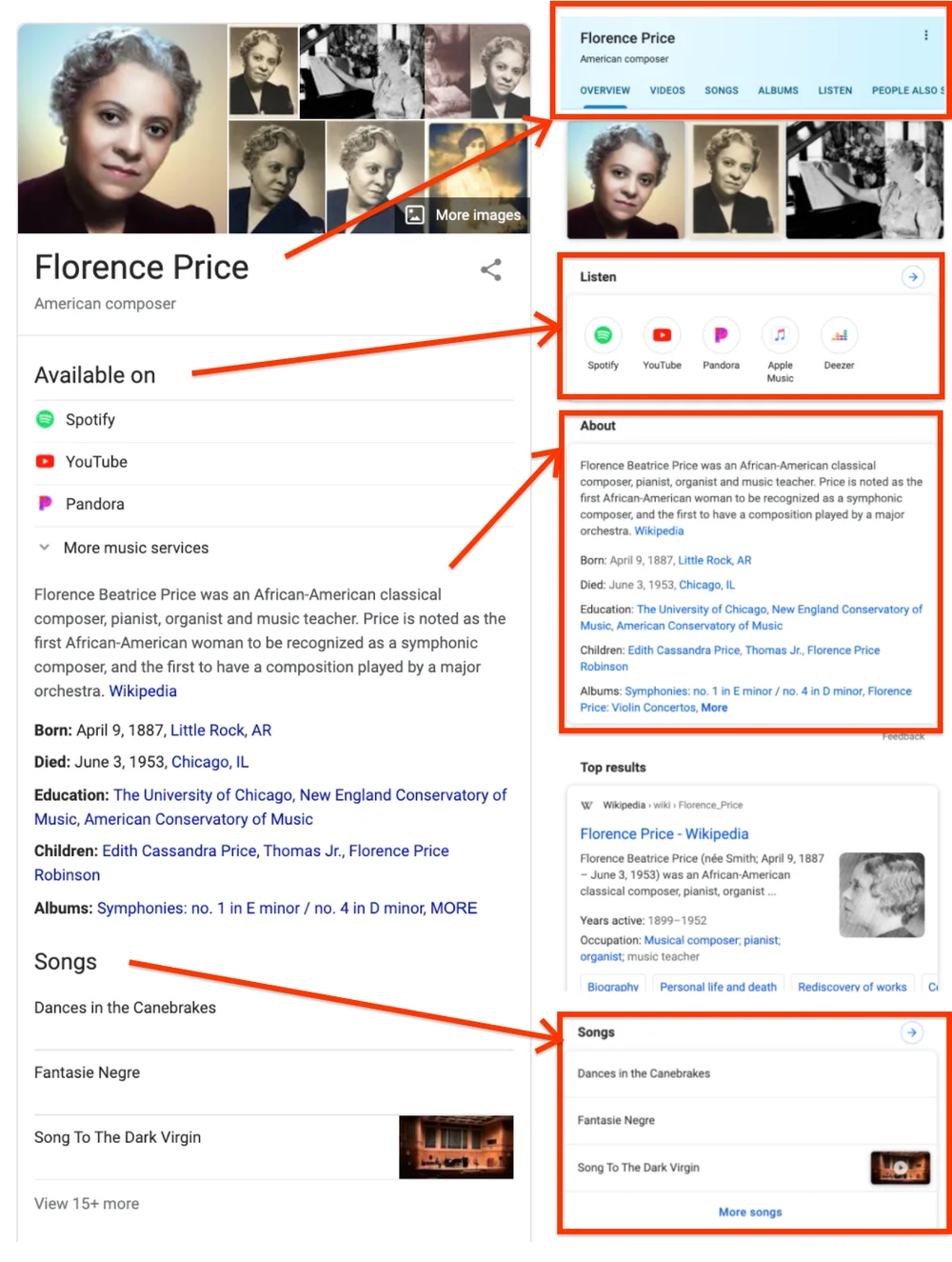

It explicitly expanded and restructured how entities are recognised and classified in the Knowledge Graph. Particularly person entities with clear roles such as author or writer.

The number of entities in the Knowledge Vault increased by 7.23% in one day to over 54 billion

In July 2023, the number of Person entities tripled in just four days

All of this is an effort to combat AI slop, provide clarity and minimise misinformation. To reduce ambiguity and to serve content where a living, breathing expert is at the heart of it.

It’s worth checking whether you have a presence in the Knowledge Graph. If you do and can claim a Knowledge Panel, do it. Cement your presence. If not, build your brand and connectedness on the internet.

What about LLMs & AI Search?

There are two main ways LLMs retrieve information:

By accessing their vast, static training data

Using retrieval-augmented generation (RAG), a type of grounding, to access external, up-to-date sources of information

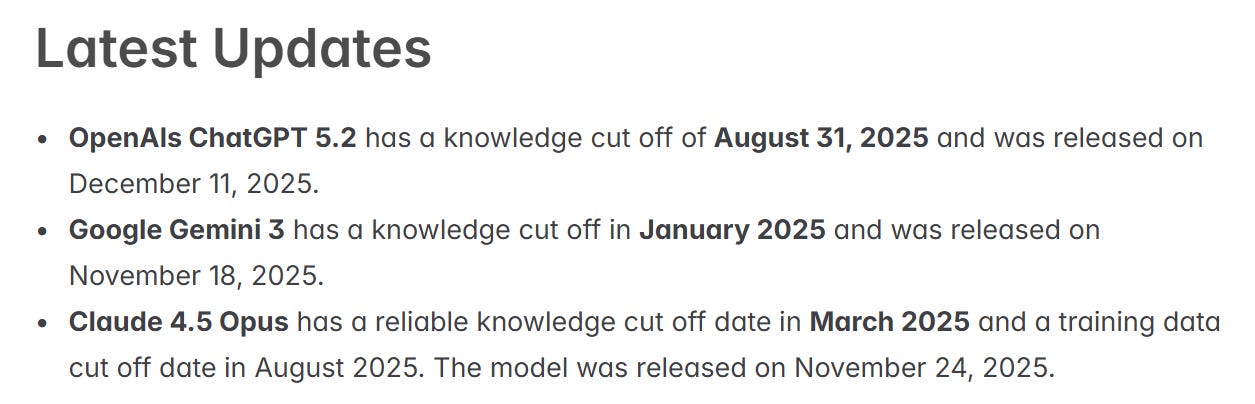

RAG is why traditional Google Search is still so important. The latest models aren’t trained on real-time data, so their knowledge lags a little behind. Before the primary model dives in to respond to your desperate need for companionship, a classifier determines whether real-time information retrieval is necessary.

They cannot know everything and have to employ RAG to make up for their lack of up-to-date information (or of facts they can verify from their training data) when retrieving certain answers. Essentially, they’re trying to make sure they aren’t chatting shite.

Hallucinating if you’re feeling fancy.

So each model needs its own form of disambiguation. Primarily, this is achieved via:

Context-aware query matching. Seeing words as tokens and even reformatting queries into more structured formats to try and achieve the most accurate result. For more complex queries, this type of query transformation leads to fan-out and embeddings.

RAG architectures. Accessing external knowledge when an accuracy threshold isn’t reached.

Conversational agents. LLMs can be prompted to decide whether to answer a query directly or ask the user for clarification when their confidence falls below a similar threshold.
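The three mechanisms above can be sketched as a simple router. This is a hypothetical illustration only: the confidence score, the thresholds (0.8 and 0.4) and the `needs_fresh_data` flag are invented for the example, and no vendor publishes its actual routing logic. What matters is the shape of the decision: ask for clarification when the query is too ambiguous, ground the answer with RAG when confidence is middling or the query needs fresh information, and answer directly otherwise.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ANSWER = "answer from training data"
    RETRIEVE = "ground the answer with RAG"
    CLARIFY = "ask the user to clarify"


@dataclass
class QueryAssessment:
    # HYPOTHETICAL fields: a real system would derive these from classifiers.
    confidence: float        # 0.0 to 1.0, how sure the model is that it understands and can answer
    needs_fresh_data: bool   # e.g. news, prices, anything time-sensitive


def route(q: QueryAssessment, answer_threshold: float = 0.8, clarify_threshold: float = 0.4) -> Action:
    """Decide how to handle a query (illustrative thresholds, not real values)."""
    if q.confidence < clarify_threshold:
        return Action.CLARIFY    # too ambiguous to retrieve well
    if q.needs_fresh_data or q.confidence < answer_threshold:
        return Action.RETRIEVE   # pull in external sources
    return Action.ANSWER


print(route(QueryAssessment(confidence=0.9, needs_fresh_data=False)))  # Action.ANSWER
print(route(QueryAssessment(confidence=0.9, needs_fresh_data=True)))   # Action.RETRIEVE
print(route(QueryAssessment(confidence=0.6, needs_fresh_data=False)))  # Action.RETRIEVE
print(route(QueryAssessment(confidence=0.2, needs_fresh_data=False)))  # Action.CLARIFY
```

If your content is the source that gets retrieved in the middle branch, you’re in the answer. If it’s too ambiguous to match confidently, you’re not.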

Remember, if your content isn’t accessible to search retrieval systems it can’t be used as part of a grounding response. There’s no separation here.

What should you do about it?

If you have wanted to do well in search over the last decade, this should’ve been a core part of your thinking. Helpful content rewards clarity.

Allegedly. It also rewards nerfing smaller sites out of existence.

Remember that being clever isn’t better than being clear.

Doesn’t mean you can’t be both. Great content entertains, educates, inspires and enhances.

Use your words

You need to learn how to write. Short, snappy sentences. Help people and machines connect the dots. If you understand the topic, you should know what people want or need to read next almost better than they do.

Use verifiable claims

Cite your sources

Showcase your expertise through your understanding

Stand out. Be different. Add information to the corpus to force a mention and/or citation.

Structure the page effectively

Write in clear, straightforward paragraphs with a logical heading structure. You really don’t have to call it chunking if you don’t want to. Just make it easy for people and machines to consume your content.

Answer the question. Answer it early.

Use summaries or hooks.

Tables of contents.

Tables, lists and actual structured data. Not schema. But also schema.

Make it easy for users to see what they’re getting and whether this page is right for them.

Intent

Lots of intent is static. Commercial queries always demand some level of comparison. Transactional queries demand some kind of buying or sales process.

But intent changes and millions of new queries crop up every day.

So you need to monitor the intent of a term or phrase. News is probably a perfect example. Stories break. Develop. What was true yesterday may not be true today. The courts of public opinion damn and praise in equal measure.

Google monitors the consensus. Tracks changes to documents. Monitors authority and - crucially here - relevance.

You can use something like AlsoAsked to monitor intent changes over time.

The technical layer

For years, structured data has helped resolve ambiguity. But we don’t have real clarity over its impact on AI search. Cleaner, well structured pages are always easier to parse and entity recognition really matters.

sameAs properties connect the dots between your brand and its social accounts.

It helps you explicitly state who your author is and crucially isn’t.

Internal linking helps bots navigate across connected sections of your website and build some form of topical authority.

Keep content up to date, with consistent date framing across the page, structured data and sitemaps.
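A minimal sketch of what that looks like in practice, generated with Python so it can be checked: an Article whose author (a Person) and publisher carry `sameAs` links, and whose `dateModified` should match the date shown on the page and the `<lastmod>` in your sitemap. Every name, URL and date below is a placeholder. The properties themselves (`@context`, `@type`, `headline`, `datePublished`, `dateModified`, `author`, `publisher`, `sameAs`) are standard schema.org vocabulary. The `dates_consistent` helper is a hypothetical convenience, not part of any tool.

```python
import json

# PLACEHOLDER values throughout: swap in your own author, profiles, URLs and dates.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Information Retrieval Part 1: Disambiguation",
    "datePublished": "2025-01-01",
    "dateModified": "2025-01-15",  # keep in sync with the on-page date and sitemap <lastmod>
    "author": {
        "@type": "Person",
        "name": "Jane Example",
        "url": "https://www.example.com/authors/jane-example",
        # sameAs ties this Person to profiles that unambiguously belong to them.
        "sameAs": [
            "https://www.linkedin.com/in/jane-example",
            "https://x.com/janeexample",
        ],
    },
    "publisher": {
        "@type": "Organization",
        "name": "Example Ltd",
        "sameAs": ["https://www.linkedin.com/company/example-ltd"],
    },
}


def dates_consistent(structured: str, on_page: str, sitemap_lastmod: str) -> bool:
    """HYPOTHETICAL helper: True when all three sources agree on the calendar date.

    Assumes each value is an ISO 8601 string (YYYY-MM-DD, optionally with a time).
    """
    return len({structured[:10], on_page[:10], sitemap_lastmod[:10]}) == 1


# Emit the JSON-LD block to place in the page's HTML.
print('<script type="application/ld+json">')
print(json.dumps(article, indent=2))
print("</script>")
print(dates_consistent(article["dateModified"], "2025-01-15", "2025-01-15T09:00:00+00:00"))  # True
```

Google’s Rich Results Test or the Schema Markup Validator will flag syntax problems with the output.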

If you like messing around with the Knowledge Graph (who the hell doesn’t), you can find confidence scores for your brand.
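One way to do that is Google’s Knowledge Graph Search API, which returns a `resultScore` for each matching entity. Google’s documentation describes that score as an indicator of how well the entity matched the request, so treat it as a relative signal rather than a true confidence percentage. The sketch below builds the request URL and parses a response. `YOUR_API_KEY` is a placeholder (you need a key from a Google Cloud project), and `SAMPLE` is a hand-made, trimmed example of the response shape, not real API output.

```python
import json
from urllib.parse import urlencode

KG_ENDPOINT = "https://kgsearch.googleapis.com/v1/entities:search"


def build_kg_url(query: str, api_key: str, limit: int = 5) -> str:
    """Build a Knowledge Graph Search API request URL."""
    return f"{KG_ENDPOINT}?{urlencode({'query': query, 'key': api_key, 'limit': limit})}"


def scored_entities(response_json: str) -> list[tuple[str, str, float]]:
    """Extract (id, name, resultScore) from a response, highest score first."""
    data = json.loads(response_json)
    rows = [
        (item["result"].get("@id", ""), item["result"].get("name", ""), item.get("resultScore", 0.0))
        for item in data.get("itemListElement", [])
    ]
    return sorted(rows, key=lambda row: row[2], reverse=True)


# To query live, fetch build_kg_url(...) with urllib.request.urlopen and pass
# the decoded body to scored_entities. SAMPLE is hand-made, not real output.
SAMPLE = """{
  "@type": "ItemList",
  "itemListElement": [
    {"@type": "EntitySearchResult",
     "result": {"@id": "kg:/g/placeholder1", "name": "Example Ltd", "@type": ["Thing", "Organization"]},
     "resultScore": 120.5},
    {"@type": "EntitySearchResult",
     "result": {"@id": "kg:/g/placeholder2", "name": "Example Ltd (band)", "@type": ["Thing"]},
     "resultScore": 14.2}
  ]
}"""

print(build_kg_url("Example Ltd", "YOUR_API_KEY"))
for entity_id, name, score in scored_entities(SAMPLE):
    print(f"{score:>8.1f}  {name}  {entity_id}")
```

If a namesake outscores your brand for your own name, that’s a fairly clear sign you have some disambiguation work to do.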

According to Google’s very own guidelines, structured data provides explicit clues about a page’s content, helping search engines understand it better.

Yes, yes, it powers rich results, etc. But it also removes ambiguity.

Entity matching

I think this ties everything together. Your brand, your products, your authors, your social accounts.

What you say about your brand matters now more than ever.

The company you keep (the phrases on a page)

The linked accounts

The events you speak at

Your about us page(s)

All of it helps machines build up a clear picture of who you are. If you have strong social profiles, you want to make sure you’re leveraging that trust.

At a page level, title consistency, using relevant entities in your opening paragraph, linking to relevant tag and article pages and using a rich, relevant author bio are a great start.

Really, just good, solid SEO. Don’t @ me.

PSA: Don’t be fucking boring. You won’t survive.